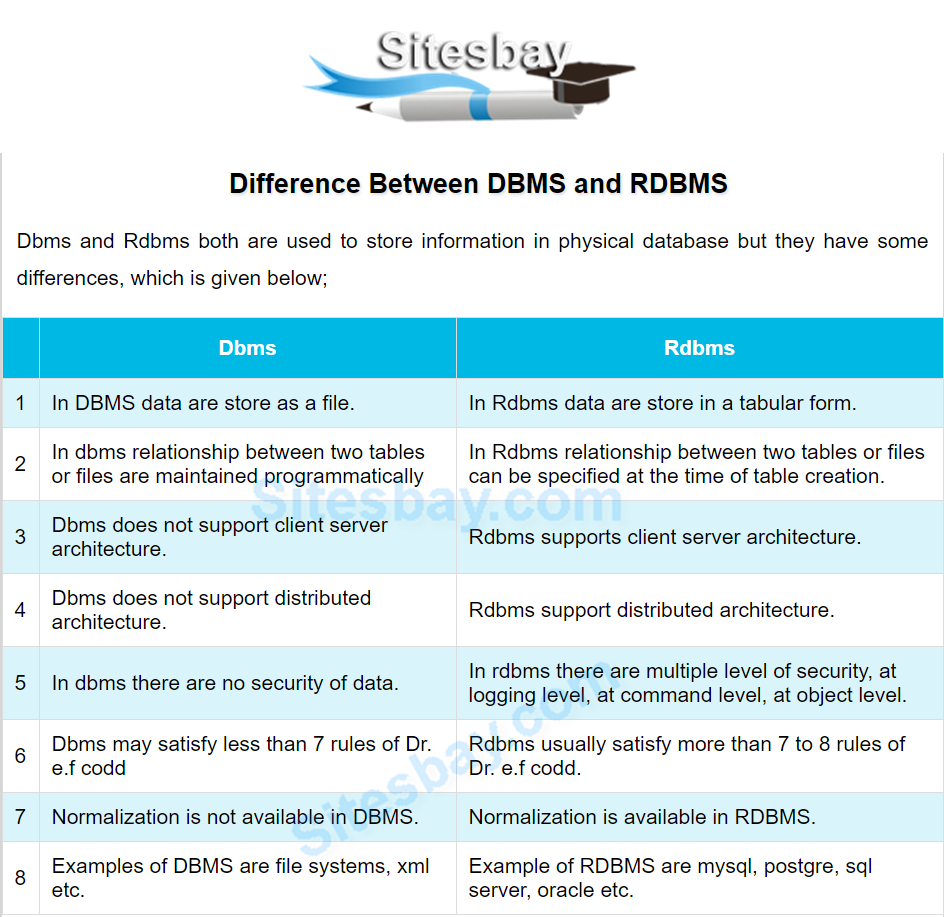

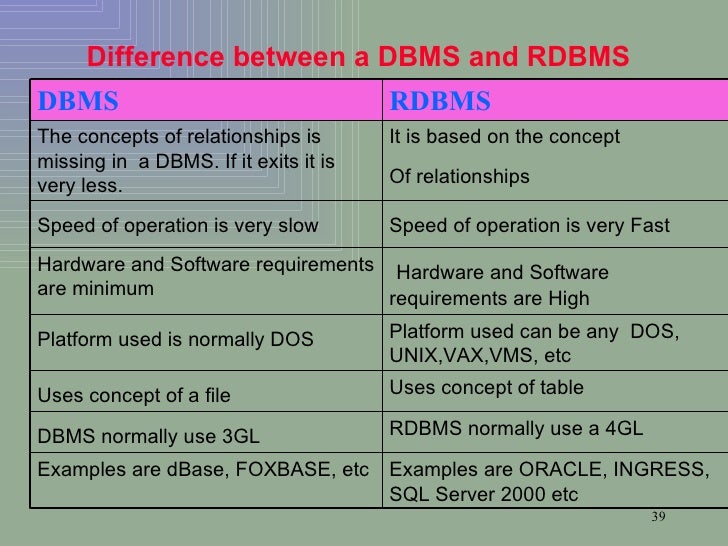

What is the difference between DBMS and RDBMS, with an example

Summary:

Data is stored in the form of tables.

In doing so, we need to obtain the excellent memory efficiency, locality, and bulk read throughput that are the hallmark of column stores while retaining low-latency random reads and updates under serializable isolation. Lastly, the product has been revised to take advantage of column-wise compressed storage and vectored execution. This article discusses the design choices made in applying column store techniques under the twin requirements of performing well on unpredictable, semi-structured RDF data and on more typical relational BI workloads.

The excellent space efficiency of column-wise compression was the greatest incentive for the column store transition. Additionally, this makes Virtuoso an option for relational analytics. Finally, combining a schema-less data model with analytics performance is attractive for data integration in places with high schema volatility.

Virtuoso has a shared-nothing cluster capability for scale-out. This is mostly used for large RDF deployments. The cluster capability is largely independent of the column-store aspect but is mentioned here because it has influenced some of the column store design choices. Virtuoso implements a clustered index scheme for both row-wise and column-wise tables. The table is simply the index on its primary key, with the dependent part following the key on the index leaf.

Secondary indices refer to the primary key by including the necessary key parts. The column store is thus based on sorted, multi-column, column-wise compressed projections. In this, Virtuoso resembles Vertica [2]. Any index of a table may be represented either row-wise or column-wise. In the column-wise case, we have a row-wise sparse index top, identical to the index tree for a row-wise index, except that at the leaf, instead of the column values themselves, there is an array of page numbers containing the column-wise compressed values for a few thousand rows.

The rows stored under a leaf row of the sparse index are called a segment. Data compression may differ radically from column to column, so that in some cases multiple segments fit in a single page and in others a single segment takes several pages. The index tree is managed as a B-tree; thus, when inserts come in, a segment may split, and if the post-split segments no longer fit on the row-wise leaf page, that page splits too, possibly propagating splits up to the root.
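The segment layout can be sketched with a toy in-memory model, assuming a sorted list of segment start keys in place of the row-wise sparse index and one Python list per column inside each segment. `MAX_SEGMENT_ROWS` is a hypothetical, deliberately tiny limit (real segments hold a few thousand rows), and page packing and leaf-page splits are left out.

```python
from bisect import bisect_right

MAX_SEGMENT_ROWS = 4  # hypothetical, tiny so that splits are easy to observe


class Segment:
    """Rows stored column-wise: one list per column, sorted by key."""

    def __init__(self, keys, values):
        self.keys = keys
        self.values = values

    def insert(self, key, value):
        pos = bisect_right(self.keys, key)
        self.keys.insert(pos, key)
        self.values.insert(pos, value)

    def split(self):
        """Keep the lower half here and return the upper half as a new segment."""
        mid = len(self.keys) // 2
        right = Segment(self.keys[mid:], self.values[mid:])
        self.keys, self.values = self.keys[:mid], self.values[:mid]
        return right


class ColumnIndex:
    """Sparse index: the first key of each segment points to that segment."""

    def __init__(self):
        self.first_keys = []
        self.segments = []

    def _locate(self, key):
        # Rightmost segment whose first key is <= key (or the first segment).
        return max(bisect_right(self.first_keys, key) - 1, 0)

    def insert(self, key, value):
        if not self.segments:
            self.first_keys.append(key)
            self.segments.append(Segment([key], [value]))
            return
        i = self._locate(key)
        seg = self.segments[i]
        seg.insert(key, value)
        self.first_keys[i] = seg.keys[0]
        if len(seg.keys) > MAX_SEGMENT_ROWS:
            right = seg.split()
            self.first_keys.insert(i + 1, right.keys[0])
            self.segments.insert(i + 1, right)

    def lookup(self, key):
        if not self.segments:
            return None
        seg = self.segments[self._locate(key)]
        pos = bisect_right(seg.keys, key) - 1
        if pos >= 0 and seg.keys[pos] == key:
            return seg.values[pos]
        return None
```

Inserting 50, 10, 40, 20 and 30 into an empty index overflows the single segment and splits it into [10, 20] and [30, 40, 50], with the sparse index updated to [10, 30].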

This splitting may result in half-full segments and index leaf pages. This is different from most column stores, where a delta structure is kept and then periodically merged into the base data [3]. Virtuoso also uses an uncommonly small page size for a column store, only 8K, the same as for the row store. This results in convenient coexistence of row-wise and column-wise structures in the same buffer pool and in always having a predictable, short latency for a random insert.

While the workloads are typically bulk load followed by mostly read, using the column store for a general purpose RDF store also requires fast value based lookups and random inserts. Large deployments are cluster based, which additionally requires having a convenient value based partitioning key. Thus, Virtuoso has no concept of a table-wide row number, not even a logical one.

The identifier of a row is the value-based key, which in turn may be partitioned on any column. Different indices of the same table may be partitioned on different columns and may conveniently reside on different nodes of a cluster, since there is no physical reference between them. A sequential row number is not desirable as a partition key, since we wish to ensure that rows of different tables that share an application-level partition key predictably fall in the same partition.
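The value-based partitioning rule can be sketched as follows, assuming a hypothetical hash partitioning over `N_PARTITIONS` partitions, with `zlib.crc32` standing in for whatever hash the engine actually uses. Because the partition depends only on the partition-key value, an order row and the line-item rows sharing its order key always land in the same partition, which a sequential row number could not guarantee. The `orders` and `lineitems` rows are made-up illustration data.

```python
import zlib

N_PARTITIONS = 8  # hypothetical cluster size


def partition_of(value) -> int:
    """Deterministic partition number derived only from the key value."""
    return zlib.crc32(repr(value).encode("utf-8")) % N_PARTITIONS


def place(rows, key_column):
    """Group rows by the partition of their partition-key column."""
    parts = {}
    for row in rows:
        parts.setdefault(partition_of(row[key_column]), []).append(row)
    return parts


orders = [{"o_orderkey": k, "o_total": 100 + k} for k in range(1, 6)]
lineitems = [{"l_orderkey": k, "l_line": n} for k in range(1, 6) for n in (1, 2)]

order_parts = place(orders, "o_orderkey")
item_parts = place(lineitems, "l_orderkey")

# Every line item sits in the same partition as its order.
for p, items in item_parts.items():
    order_keys = {o["o_orderkey"] for o in order_parts.get(p, [])}
    assert all(i["l_orderkey"] in order_keys for i in items)
```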

The column compression applied to the data is tuned entirely by the data itself, without any DBA intervention. The need to serve as an RDF store for unpredictable, run-time-typed data makes this a necessity, and it is also a desirable feature for an RDBMS use case. The compression formats include (i) run length, for long stretches of repeating values. If of variable length, values may be of heterogeneous types, and there is a delta notation that compresses away a value differing from the previous value only in the last byte.
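Two of the ideas in this paragraph, run-length encoding and the last-byte delta for variable-length values, can be sketched as below. The representations (a list of `(value, run_length)` pairs and `("full", ...)` / `("delta", ...)` tags) are illustrative assumptions; Virtuoso's actual on-page formats are not described here.

```python
def rle_encode(values):
    """Run-length encode a column into (value, run_length) pairs."""
    runs = []
    for v in values:
        if runs and runs[-1][0] == v:
            runs[-1] = (v, runs[-1][1] + 1)
        else:
            runs.append((v, 1))
    return runs


def rle_decode(runs):
    return [v for v, n in runs for _ in range(n)]


def last_byte_delta_encode(values):
    """Store a bytes value as ("delta", last_byte) when it differs from the
    previous value only in its last byte; otherwise store it in full."""
    out = []
    prev = None
    for v in values:
        if (prev is not None and len(v) == len(prev) and len(v) > 0
                and v[:-1] == prev[:-1] and v != prev):
            out.append(("delta", v[-1]))  # v[-1] is an int in 0..255
        else:
            out.append(("full", v))
        prev = v
    return out


def last_byte_delta_decode(encoded):
    out = []
    for kind, payload in encoded:
        if kind == "delta":
            out.append(out[-1][:-1] + bytes([payload]))
        else:
            out.append(payload)
    return out
```

For example, `b"http://ex.org/a1"` followed by `b"http://ex.org/a2"` stores the second value as a single byte.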

Type-specific index lookup, insert and delete operations are implemented for each compression format. Virtuoso supports row-level locking with isolation up to serializable with both row and column-wise structures. A read committed query does not block for rows with uncommitted data but rather shows the pre-image. Underneath the row level lock on the row-wise leaf is an array of row locks for the column-wise represented rows in the segment.

These hold the pre-image for uncommitted updated columns, while the updated value is written into the primary column. RDF updates are always a combination of delete plus insert, since there are no dependent columns; all parts of a triple make up the key. Update in place with a pre-image is needed for the RDB case.
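The read-committed behavior can be illustrated with a toy column, assuming the updated value goes into the primary array while the pre-image is kept per row position for as long as the writer is uncommitted. The class and method names are hypothetical, and a write-write conflict is reduced to an exception.

```python
class ColumnWithPreImages:
    """Toy column: newest values in place, pre-images kept for open writers."""

    def __init__(self, values):
        self.values = list(values)  # primary column, holds the newest value
        self.pre_images = {}        # row position -> (writer txn, old value)

    def update(self, pos, new_value, txn):
        entry = self.pre_images.get(pos)
        if entry is not None and entry[0] != txn:
            raise RuntimeError("row has an uncommitted update by another txn")
        # Keep only the first pre-image if the same txn updates twice.
        self.pre_images.setdefault(pos, (txn, self.values[pos]))
        self.values[pos] = new_value

    def read_committed(self, pos, txn):
        entry = self.pre_images.get(pos)
        if entry is not None and entry[0] != txn:
            return entry[1]  # do not block: show the committed pre-image
        return self.values[pos]

    def commit(self, txn):
        self.pre_images = {p: e for p, e in self.pre_images.items() if e[0] != txn}

    def rollback(self, txn):
        for pos, (writer, old) in list(self.pre_images.items()):
            if writer == txn:
                self.values[pos] = old
                del self.pre_images[pos]
```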

Checking for locks does not involve any value-based comparisons. Locks are entirely positional and are moved along in the case of inserts or deletes or splits of the segment they fall in. By far the most common use case is a query on a segment with no locks, in which case all the transaction logic may be bypassed. In the case of large reads that need repeatable semantics, row-level locks are escalated to a page lock on the row-wise leaf page, under which there are typically some hundreds of thousands of rows.
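Positional locking can be sketched as a segment-local map from row position to lock owner: no key values are compared, and an insert or delete simply shifts the positions of the locks that follow the affected row. The class and method names are hypothetical; the empty-map check stands in for the common case of a segment with no locks, where the transaction logic is bypassed.

```python
class SegmentLocks:
    """Row locks for one column-wise segment, keyed by row position only."""

    def __init__(self):
        self.locks = {}  # row position -> transaction id

    def lock(self, pos, txn):
        owner = self.locks.get(pos)
        if owner is not None and owner != txn:
            return False  # conflict: the caller would wait or abort
        self.locks[pos] = txn
        return True

    def is_locked(self, pos):
        # Fast path: a segment without locks needs no further checking.
        return bool(self.locks) and pos in self.locks

    def on_insert(self, pos):
        """A row was inserted at pos: locks at pos or later move to pos + 1."""
        self.locks = {(p + 1 if p >= pos else p): t for p, t in self.locks.items()}

    def on_delete(self, pos):
        """The row at pos was deleted: drop its lock, move later locks back."""
        self.locks = {(p - 1 if p > pos else p): t
                      for p, t in self.locks.items() if p != pos}
```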

Column stores generally have a vectored execution engine that performs query operators on a large number of tuples at a time, since tuple-at-a-time latency is longer than with a row store. Vectored execution can also improve row store performance, as we noticed when remodeling the entire Virtuoso engine to always run vectored. The benefits of eliminating interpretation overhead, improving cache locality, and making better use of CPU memory throughput all apply equally to row stores.

Consider a pipeline of joins, where each step can change the cardinality of the result as well as add columns to the result. At the end we have a set of tuples, but their values are stored in multiple arrays that are not aligned. For this, one must keep a mapping indicating the row of input that produced each row of output for every stage in the pipeline.

Using these, one may reconstruct whole rows without needing to copy data at each step. This triple reconstruction is fast, as it is nearly always done on a large number of rows, optimizing memory bandwidth. Virtuoso vectors are typically long, from to values in a batch of the execution pipeline. Shorter vectors, as in Vectorwise [4], are just as useful for CPU optimization; besides, fitting a vector in the first-level cache is a plus.
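The mapping idea can be sketched in a few lines: each pipeline stage emits its new column plus an array recording which input row produced each output row, and whole rows are rebuilt once at the end by following those arrays back to the first stage. The two-stage pipeline below (a one-to-many join from hypothetical orders to line numbers, then a filter that adds a computed column) and its data are assumptions made for illustration.

```python
def run_pipeline():
    # Stage 0: a scan produces one column.
    order_keys = [1, 2, 3]

    # Stage 1: one-to-many join; cardinality changes, a column is added.
    lines_by_order = {1: [10, 11], 2: [], 3: [30]}  # hypothetical data
    line_no, s1_parent = [], []
    for i, k in enumerate(order_keys):
        for ln in lines_by_order[k]:
            line_no.append(ln)
            s1_parent.append(i)  # output row -> stage-0 row

    # Stage 2: a filter that drops rows and adds a computed column.
    price, s2_parent = [], []
    for j, ln in enumerate(line_no):
        if ln != 11:
            price.append(ln * 1.5)
            s2_parent.append(j)  # output row -> stage-1 row

    # Only now are whole rows materialized, by chasing the parent arrays.
    rows = []
    for r, p in enumerate(price):
        j = s2_parent[r]
        i = s1_parent[j]
        rows.append((order_keys[i], line_no[j], p))
    return rows
```

No stage copies the columns of earlier stages forward; `run_pipeline()` returns `[(1, 10, 15.0), (3, 30, 45.0)]`.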

Since Virtuoso uses vectoring also for speeding up index lookup, having a longer vector of values to fetch increases the density of hits in the index, thus directly improving efficiency: Every time the next value to fetch is on the same segment or same row-wise leaf page, we can skip all but the last stage of the search.

This naturally requires the key values to be sorted but the gain far outweighs the cost as shown later. An index lookup keeps track of the hit density it meets at run time. If the density is low, the lookup can request a longer vector to be sent in the next batch. This adaptive vector sizing speeds up large queries by up to a factor of 2 while imposing no overhead on small ones. Another reason for favoring large vector sizes is the use of vectored execution for overcoming latency in a cluster.
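A sketch of the two effects just described, under simplifying assumptions: the index is a list of sorted segments, each batch of lookup keys is sorted first, and a key that falls in the same segment as the previous one skips the sparse-index search. "Hit density" is approximated here as the fraction of keys served from the previously located segment; that proxy, the 0.5 threshold, the doubling rule, and the size cap are all hypothetical, not Virtuoso's parameters.

```python
from bisect import bisect_right


class VectoredLookup:
    """Batched index lookup over sorted segments (toy model)."""

    def __init__(self, segments, vector_size=4, max_vector_size=64):
        self.segments = segments  # sorted key lists, themselves in key order
        self.first_keys = [seg[0] for seg in segments]
        self.vector_size = vector_size  # batch size requested from the producer
        self.max_vector_size = max_vector_size
        self.searches = 0  # number of full sparse-index searches performed

    def _in_segment(self, i, key):
        upper = self.first_keys[i + 1] if i + 1 < len(self.first_keys) else None
        return key >= self.first_keys[i] and (upper is None or key < upper)

    def lookup_batch(self, keys):
        found = {}
        current = None  # segment used by the previous key
        reused = 0      # keys served without a new sparse-index search
        for key in sorted(keys):  # sorting turns random keys into a local scan
            if current is not None and self._in_segment(current, key):
                reused += 1  # same segment: skip straight to the last stage
            else:
                self.searches += 1
                current = max(bisect_right(self.first_keys, key) - 1, 0)
            seg = self.segments[current]
            pos = bisect_right(seg, key) - 1
            found[key] = pos >= 0 and seg[pos] == key
        # Hypothetical adaptive rule: low hit density -> request a longer vector.
        if keys and reused / len(keys) < 0.5:
            self.vector_size = min(self.vector_size * 2, self.max_vector_size)
        return found
```

With segments `[1, 2, 3]`, `[10, 11, 12]` and `[20, 21, 22]`, the batch `[12, 1, 11, 3, 10]` needs only two segment searches once sorted.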

RDF requires supporting columns typed at run time and the addition of a distinct type for the URI and the typed literal. A typed literal is a string, XML fragment, or scalar with optional type and language tags. We do not wish to encode all these in a single dictionary table since, at least for numbers and dates, we wish to have the natural collation of the type in the index, and having to look up numbers from a dictionary would make arithmetic nearly infeasible.
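The collation argument can be illustrated with a hypothetical sort key for a run-time-typed column: numbers of any numeric type compare by value, dates by date, and strings by string, with a type-class tag keeping the classes apart. Surrogate IDs from a dictionary table, by contrast, order values by arrival. The `TYPE_CLASS` ordering and both helper names are assumptions made for illustration.

```python
from datetime import date
from decimal import Decimal
from fractions import Fraction

# Hypothetical ordering of type classes within one mixed-type column.
TYPE_CLASS = {int: 0, float: 0, Decimal: 0, date: 1, str: 2}


def collation_key(value):
    """Sort key keeping each type's natural order inside its type class."""
    cls = TYPE_CLASS[type(value)]
    if cls == 0:
        # int, float and Decimal compare exactly by numeric value.
        return (cls, Fraction(value))
    return (cls, value)


def dictionary_ids(values):
    """Assign surrogate IDs in arrival order, as a dictionary table would."""
    ids = {}
    for v in values:
        ids.setdefault(v, len(ids))
    return ids
```

Sorting `[300, 20, 1]` by `collation_key` gives numeric order, while sorting by the IDs from `dictionary_ids([300, 20, 1])` gives back the arrival order, which is useless for range conditions or arithmetic.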

Virtuoso provides an 'any' type and allows its use as a key. In practice, values of the same type will end up next to each other, leading to typed compression formats without per-value typing overhead. Numbers can be an exception, since integers, floats, doubles and decimals may be mixed in consecutive places in an index.

All times are in seconds and all queries run from memory. Data sizes are given as counts of allocated 8K pages. We would have expected the row store to outperform columns for sequential insert.

This is not so, however, because the inserts are almost always tightly ascending and the column-wise compression is more efficient than the row-wise one. The row store does not have this advantage. The times for Q1, a linear scan of lineitem, are 6. TPC-H generally favors table scans and hash joins. The query is:. Otherwise this is better done as a hash join. In the hash join case, there are two further variants: a non-vectored invisible join [6] and a vectored hash join.

For a hash table not fitting in CPU cache, we expect the vectored hash join to be better, since it will miss the cache on many consecutive buckets concurrently even though it does extra work materializing prices and discounts. In this case, the index plan runs with automatic vector size, i. It then switches the vector size to the maximum value of We note that the invisible hash at the high selectivity point is slightly better than the vectored hash join with early materialization.

The better memory throughput of the vectored hash join starts winning as the hash table gets larger, compensating for the cost of early materialization. It may be argued that the Virtuoso index implementation is better optimized than the hash join. The hash join used here is a cuckoo hash with a special case for integer keys with no dependent part. For hash lookups that mostly find no match, Bloom filters could be added, and a bucket-chained hash would probably perform better, as every bucket would have an overflow list.
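For reference, a cuckoo hash set specialized to integer keys with no dependent part can be sketched as follows: every key lives in one of two candidate slots, so a probe touches at most two places, and an insert evicts occupants back and forth until one finds a free slot. The two multiplicative hash constants, the starting capacity, `MAX_KICKS`, and the grow-and-rehash policy are illustrative assumptions, not details taken from Virtuoso.

```python
class IntCuckooSet:
    """Cuckoo hash set for integer keys with no dependent part (toy model).

    Every key lives either at t1[h1(key)] or at t2[h2(key)], so a lookup
    probes at most two slots.
    """

    MAX_KICKS = 32  # hypothetical eviction limit before growing the tables
    _MASK = (1 << 64) - 1

    def __init__(self, capacity=8):
        self.size = capacity
        self.t1 = [None] * capacity
        self.t2 = [None] * capacity

    def _h1(self, key):
        # Multiplicative hashing: high 32 bits of a 64-bit product.
        return (((key * 0x9E3779B97F4A7C15) & self._MASK) >> 32) % self.size

    def _h2(self, key):
        return (((key * 0xC2B2AE3D27D4EB4F) & self._MASK) >> 32) % self.size

    def contains(self, key):
        return self.t1[self._h1(key)] == key or self.t2[self._h2(key)] == key

    def insert(self, key):
        if self.contains(key):
            return
        for _ in range(self.MAX_KICKS):
            i = self._h1(key)
            key, self.t1[i] = self.t1[i], key  # place key, pick up the evictee
            if key is None:
                return
            j = self._h2(key)
            key, self.t2[j] = self.t2[j], key
            if key is None:
                return
        self._grow()
        self.insert(key)  # re-insert the key that is still homeless

    def _grow(self):
        keys = [k for k in self.t1 + self.t2 if k is not None]
        self.size *= 2
        self.t1 = [None] * self.size
        self.t2 = [None] * self.size
        for k in keys:
            self.insert(k)
```

Because a miss always costs two probes, the paragraph's suggestion of a Bloom filter in front of the table targets exactly the workload where most lookups find nothing.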

The experiment was also repeated with a row-wise database. Here, the indexed plan is best but is in all cases slower than the column store indexed plan. The invisible hash is better than vectored hash with early materialization due to the high cost of materializing the columns. To show a situation where rows perform better than columns, we make a stored procedure that picks random orderkeys and retrieves all columns of lineitems of the order.

We retrieve 1 million orderkeys, single threaded, without any vectoring; this takes Column stores traditionally shine with queries accessing large fractions of the data. We clearly see that the penalty for random access need not be high and can be compensated by having more of the data fit in memory. We use DBpedia 3. Dictionary tables mapping ids of URI's and literals to the external form are not counted in the size figures.

The row-wise representation compresses repeating key values and uses a bitmap for the last key part in POGS, GS and SP; thus it is well compressed as row stores go, over 3x compared to uncompressed. Bulk load on 8 concurrent streams with column storage takes: s, resulting in in pages, down to pages after automatic re-compression.

With row storage, it takes s resulting in pages. Next we measure index lookup performance by checking that the two covering indices contain the same data. All the times are in seconds of real time, with up to 16 threads in use (one thread per core). Vectoring introduces locality to an otherwise random index access pattern.

In the experiments, the cluster consists of 4 server processes, each managing 8 partitions. What is lost in partitioning and message passing is gained in having more runnable threads. The temporary space utilization of the build side of the hash join was 10GB. Extracting all values of a column is especially efficient in a column store. The experiments also make it possible to measure the performance cost of using a schema-less data model as opposed to SQL.

ACSB - 53 RDBMS (CE) Set 1

- Q1: Explain the components of a database system and the applications of a database management system.
- Q2: Create an ER diagram of the UMS in LPU and convert that ER diagram into a relational model. You can use different attributes depending on your understanding; use at least 5 entities and all attribute types, including derived, key, and composite attributes.
- Q3: Create a table about professors, using data types such as int, float, and char. Insert at least 5 rows, and also create two tables to perform a union operation.
- Explain the relational model with an example.
- Include 6 entities, use all the possible attributes, and convert the ER diagram into a relational model.

Storing data in tabular form, with rows and columns, offers several advantages over a non-relational database management structure. A DBMS does not impose integrity constraints, and only a single user can access the data. An RDBMS supports multiple users, adds support for a tabular structure of data, and enforces relationships among tables; it also uses a single uniform language (DDL) for different roles. A file system is less complex than a DBMS, but file systems provide less security than a DBMS.

Use case: preventing accidents and possible deaths in autonomous vehicles. Information from sensors that prevent collisions or help an AV avoid a pedestrian is critical and must be processed in near real time, without delay, latency, or a round trip to the cloud. Applications can keep running with a local database when connectivity is lost and then replicate the data to a cloud database when the connection is restored.

Difference between NoSQL and SQL, with a code example

Non-relational databases are often called NoSQL; they use several different models, such as the key-value model, column stores, document databases, and graph databases. Instead of tables, non-relational databases are often document-oriented.

SQL is a non-procedural language: you concentrate on what you want, not on how you get it. Put another way, you need not be concerned with procedural details. Key relational terms and operations:

- Table name: the name depicts the content of the table (for example, Marks).
- Column name: each column in the table is given a name.
- Project: takes a single table and returns a vertical subset of the table.
- Intersect: takes two tables and returns all rows that belong to both the first and the second table.
- Join: the tables being joined must have a common column.
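To make the relational operations described above concrete (project, intersect, union, and a join on a common column), here is a small example using Python's built-in `sqlite3` module with an in-memory database. The `professor` and `marks` tables, their columns, and the rows are hypothetical, echoing the professor-table exercise with int, float and char columns. The last statement keeps the same professor as one schema-less, document-style record for contrast with the NoSQL models.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Relational tables: fixed columns with declared types (hypothetical schema).
cur.execute("CREATE TABLE professor (id INTEGER PRIMARY KEY, name CHAR(30), salary FLOAT)")
cur.execute("CREATE TABLE marks (prof_id INTEGER REFERENCES professor(id), "
            "course CHAR(10), score INTEGER)")
cur.executemany("INSERT INTO professor VALUES (?, ?, ?)",
                [(1, "Rao", 1200.0), (2, "Kaur", 1500.0), (3, "Mehta", 1100.0)])
cur.executemany("INSERT INTO marks VALUES (?, ?, ?)",
                [(1, "DBMS", 78), (2, "DBMS", 91), (2, "SQL", 85)])

# Project: a vertical subset (only some columns) of one table.
names = [r[0] for r in cur.execute("SELECT name FROM professor ORDER BY id")]

# Intersect: rows present in both query results.
both = [r[0] for r in cur.execute(
    "SELECT prof_id FROM marks WHERE course = 'DBMS' "
    "INTERSECT SELECT prof_id FROM marks WHERE course = 'SQL'")]

# Union: rows present in either result, duplicates removed.
either = sorted(r[0] for r in cur.execute(
    "SELECT prof_id FROM marks WHERE course = 'DBMS' "
    "UNION SELECT prof_id FROM marks WHERE course = 'SQL'"))

# Join: the tables share a common column (professor.id = marks.prof_id).
joined = list(cur.execute(
    "SELECT p.name, m.course, m.score FROM professor p "
    "JOIN marks m ON p.id = m.prof_id ORDER BY m.score DESC"))

# Document-oriented alternative: one self-contained, schema-less record.
doc = json.dumps({"id": 2, "name": "Kaur",
                  "marks": [{"course": "DBMS", "score": 91},
                            {"course": "SQL", "score": 85}]})

print(names)   # ['Rao', 'Kaur', 'Mehta']
print(both)    # [2]
print(either)  # [1, 2]
print(joined)  # [('Kaur', 'DBMS', 91), ('Kaur', 'SQL', 85), ('Rao', 'DBMS', 78)]
```

In the relational version the marks live in their own table and are connected through the common `prof_id` column; in the document version they are nested inside the professor record, so no join is needed to read them, but nothing enforces the relationship either.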
