
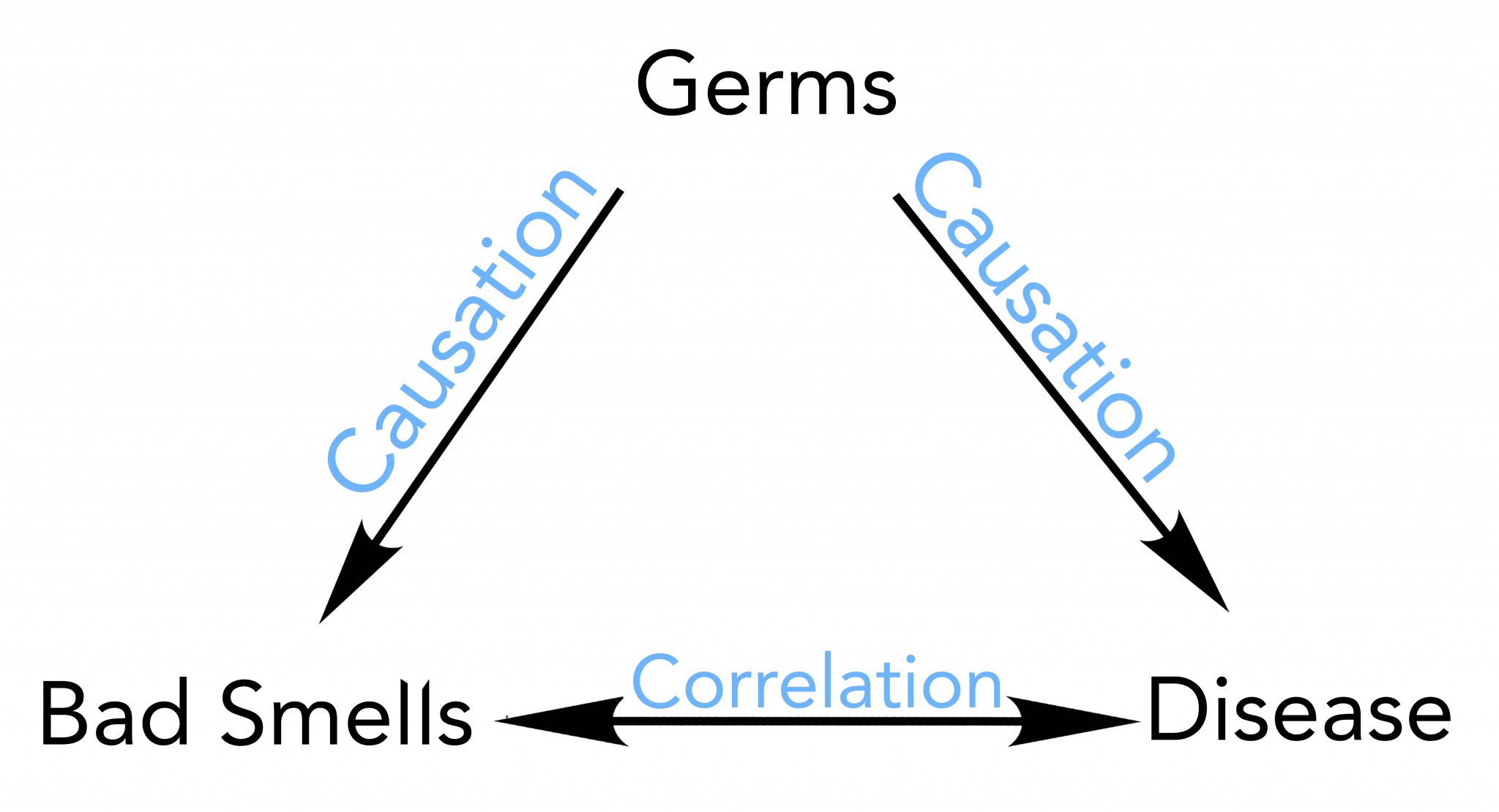

How to tell correlation from causation

Tools for causal inference from cross-sectional innovation surveys with continuous or discrete variables: Theory and applications

Dominik Janzing, Paul Nightingale

This paper presents a new statistical toolkit by applying three techniques for data-driven causal inference from the machine learning community that are little known among economists and innovation scholars: a conditional independence-based approach, additive noise models, and non-algorithmic inference by hand.

Preliminary results provide causal interpretations of some previously observed correlations. Our statistical 'toolkit' could be a useful complement to existing techniques.

Keywords: causal inference; innovation surveys; machine learning; additive noise models; directed acyclic graphs.

However, a long-standing problem for innovation scholars is obtaining causal estimates from observational data. For a long time, causal inference from cross-sectional surveys has been considered impossible. Hal Varian, Chief Economist at Google and Emeritus Professor at the University of California, Berkeley, commented on the value of machine learning techniques for econometricians:

My standard advice to graduate students these days is go to the computer science department and take a class in machine learning. There have been very fruitful collaborations between computer scientists and statisticians in the last decade or so, and I expect collaborations between computer scientists and econometricians will also be productive in the future.

(Hal Varian)

This paper seeks to transfer knowledge from computer science and machine learning communities into the economics of innovation and firm growth, by offering an accessible introduction to techniques for data-driven causal inference, as well as three applications to innovation survey datasets that are expected to have several implications for innovation policy. The contribution of this paper is to introduce a variety of techniques for causal inference (including very recent approaches) to the toolbox of econometricians and innovation scholars: a conditional independence-based approach; additive noise models; and non-algorithmic inference by hand.

These statistical tools are data-driven, rather than theory-driven, and can be useful alternatives for obtaining causal estimates from observational (that is, non-experimental) data. Several papers have previously introduced the conditional independence-based approach (Tool 1) in economic contexts such as monetary policy, macroeconomic SVAR (Structural Vector Autoregression) models, and corn price dynamics. A further contribution is that these new techniques are applied to three contexts in the economics of innovation.

While most analyses of innovation datasets focus on reporting the statistical associations found in observational data, policy makers need causal evidence in order to understand if their interventions in a complex system of inter-related variables will have the expected outcomes. This paper, therefore, seeks to elucidate the causal relations between innovation variables using recent methodological advances in machine learning.

Two recent survey papers in the Journal of Economic Perspectives have highlighted how machine learning techniques can provide interesting results regarding statistical associations. Section 2 presents the three tools, and Section 3 describes our CIS dataset. Section 4 contains the three empirical contexts: funding for innovation, information sources for innovation, and innovation expenditures and firm growth.

Section 5 concludes.

In the second case, where a third variable Z is a common cause of X and Y, Reichenbach postulated that X and Y are conditionally independent given Z, i.e. $p(x, y \mid z) = p(x \mid z)\,p(y \mid z)$. The fact that all three cases can also occur together is an additional obstacle for causal inference. For this study, we will mostly assume that only one of the cases occurs and try to distinguish between them, subject to this assumption. We are aware of the fact that this oversimplifies many real-life situations.

However, even if the cases interfere, one of the three types of causal links may be more significant than the others. It is also more valuable for practical purposes to focus on the main causal relations. A graphical approach is useful for depicting causal relations between variables (Pearl). The causal Markov condition states that every variable is conditionally independent of its non-descendants in the graph, given its parents (its direct causes). This condition implies that indirect (distant) causes become irrelevant when the direct (proximate) causes are known.

Figure 1. Directed Acyclic Graph (source: the authors).

The density of the joint distribution $p(x_1, \ldots, x_6)$, if it exists, can therefore be represented in equation form and factorized as follows:

$$p(x_1, \ldots, x_6) = \prod_{j=1}^{6} p(x_j \mid pa_j),$$

where $pa_j$ denotes the values of the parents of $x_j$ in the DAG. The faithfulness assumption states that only those conditional independences occur that are implied by the graph structure. This implies, for instance, that two variables with a common cause will not be rendered statistically independent by structural parameters that, perhaps by chance, are fine-tuned to exactly cancel each other out.

This is conceptually similar to the assumption that one object does not perfectly conceal a second object directly behind it, eclipsing it from the line of sight of a viewer located at a specific viewpoint (Pearl). In terms of Figure 1, faithfulness requires that the direct effect of $x_3$ on $x_1$ is not calibrated to be perfectly cancelled out by the indirect effect of $x_3$ on $x_1$ operating via $x_5$.
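
A minimal simulation sketch of such a cancellation, assuming a linear Gaussian model restricted to the three variables named above ($x_3$, $x_5$, $x_1$). The coefficients `a`, `b`, `c` are hypothetical and chosen so that the direct effect exactly offsets the indirect one; the remaining nodes of Figure 1 are ignored.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 100_000

# Hypothetical coefficients: x3 -> x5 (weight a), x5 -> x1 (weight b),
# and a direct edge x3 -> x1 (weight c) fine-tuned so that c = -a * b.
a, b = 0.8, 1.5
c = -a * b

x3 = rng.normal(size=n)
x5 = a * x3 + rng.normal(size=n)
x1 = c * x3 + b * x5 + rng.normal(size=n)

# The total effect of x3 on x1 is c + a*b = 0, so x3 and x1 look
# uncorrelated although x3 is a direct cause of x1: faithfulness fails.
corr_fine_tuned = np.corrcoef(x3, x1)[0, 1]

# Perturbing the direct effect slightly breaks the cancellation.
x1_generic = (c + 0.5) * x3 + b * x5 + rng.normal(size=n)
corr_generic = np.corrcoef(x3, x1_generic)[0, 1]

print(f"fine-tuned: {corr_fine_tuned:.3f}, generic: {corr_generic:.3f}")
```

The cancellation only holds for the exact value `c = -a * b`; any perturbation of the parameters makes the dependence between $x_3$ and $x_1$ visible again, which is why such fine-tuning is treated as non-generic.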

This perspective is motivated by a physical picture of causality, according to which variables may refer to measurements in space and time: if $X_i$ and $X_j$ are variables measured at different locations, then every influence of $X_i$ on $X_j$ requires a physical signal propagating through space. Insights into the causal relations between variables can be obtained by examining patterns of unconditional and conditional dependences between variables.

Bryant, Bessler, and Haigh, as well as Kwon and Bessler, show how a third variable C can elucidate the causal relations between variables A and B by using three unconditional (in)dependence relations. Under several assumptions (footnote 2), if there is statistical dependence between A and B, and statistical dependence between A and C, but B is statistically independent of C, then we can prove that A does not cause B.

In principle, dependences could be only of higher order, that is, X and Y could be uncorrelated yet statistically dependent. The Hilbert-Schmidt Independence Criterion (HSIC) measures dependence of random variables, like a correlation coefficient, with the difference being that it also accounts for non-linear dependences. For multivariate Gaussian distributions (footnote 3), conditional independence can be inferred from the covariance matrix by computing partial correlations.
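
As an illustration of how HSIC picks up dependence that a correlation coefficient misses, here is a minimal sketch of the biased empirical HSIC estimator with Gaussian kernels and a permutation test. The median-heuristic bandwidth, the 200 permutations and the function names `gaussian_gram` and `hsic_test` are our own assumptions, not details taken from the paper.

```python
import numpy as np

def gaussian_gram(v):
    """Gaussian-kernel Gram matrix; bandwidth from the median heuristic (an assumption)."""
    v = np.asarray(v, dtype=float).reshape(-1, 1)
    sq_dists = (v - v.T) ** 2
    return np.exp(-sq_dists / np.median(sq_dists[sq_dists > 0]))

def hsic_test(x, y, n_perm=200, seed=0):
    """Biased HSIC statistic trace(K H L H) / n**2 and a permutation p-value."""
    rng = np.random.default_rng(seed)
    n = len(x)
    H = np.eye(n) - 1.0 / n             # centring matrix I - (1/n) 1 1^T
    Kc = H @ gaussian_gram(x) @ H       # centred once, reused for every permutation
    L = gaussian_gram(y)

    def statistic(L_perm):
        # trace(K H L H) equals the elementwise sum of (H K H) * L for symmetric L
        return np.sum(Kc * L_perm) / n**2

    observed = statistic(L)
    # Shuffling y destroys any dependence on x: permute rows and columns of L together.
    null = np.array([statistic(L[np.ix_(p, p)])
                     for p in (rng.permutation(n) for _ in range(n_perm))])
    p_value = (1 + np.sum(null >= observed)) / (1 + n_perm)
    return observed, p_value

rng = np.random.default_rng(1)
x = rng.normal(size=300)
y_dep = x**2 + 0.3 * rng.normal(size=300)   # uncorrelated with x in the population, yet dependent
y_ind = rng.normal(size=300)                # independent of x

print("corr(x, y_dep):", np.corrcoef(x, y_dep)[0, 1])
print("HSIC p-value, dependent pair:  ", hsic_test(x, y_dep)[1])
print("HSIC p-value, independent pair:", hsic_test(x, y_ind)[1])
```

The permutation test keeps the sketch free of distributional approximations; for large samples a gamma approximation of the null distribution is a common cheaper alternative.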

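Instead of using the covariance matrix, we describe the following more intuitive way to obtain partial correlations: let $P(X, Y, Z)$ be Gaussian; then $X \perp Y \mid Z$ is equivalent to

$$\rho_{X - aZ,\; Y - bZ} = 0, \qquad (2)$$

where $a$ and $b$ are the coefficients of the least-squares regressions of $X$ on $Z$ and of $Y$ on $Z$. Explicitly, they are given by

$$a = \frac{\operatorname{Cov}(X, Z)}{\operatorname{Var}(Z)}, \qquad b = \frac{\operatorname{Cov}(Y, Z)}{\operatorname{Var}(Z)}.$$

Note, however, that in non-Gaussian distributions, vanishing of the partial correlation on the left-hand side of (2) is neither necessary nor sufficient for $X \perp Y \mid Z$.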
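
A short sketch of the residual route to partial correlations for a single scalar conditioning variable $Z$, checked against the covariance-matrix route (entries of the inverse covariance matrix). The helper names `partial_corr` and `partial_corr_from_precision` are ours, and the simulated common-cause model is purely illustrative.

```python
import numpy as np

def partial_corr(x, y, z):
    """Correlation of the residuals X - aZ and Y - bZ, with a, b the regression slopes."""
    x, y, z = (np.asarray(v, dtype=float) for v in (x, y, z))
    zc = z - z.mean()
    a = np.mean((x - x.mean()) * zc) / np.var(z)   # Cov(X, Z) / Var(Z)
    b = np.mean((y - y.mean()) * zc) / np.var(z)   # Cov(Y, Z) / Var(Z)
    return np.corrcoef(x - a * z, y - b * z)[0, 1]

def partial_corr_from_precision(x, y, z):
    """Covariance-matrix route: -P_xy / sqrt(P_xx * P_yy), P = inverse covariance."""
    P = np.linalg.inv(np.cov(np.vstack([x, y, z])))
    return -P[0, 1] / np.sqrt(P[0, 0] * P[1, 1])

# Usage: Z is a common cause of X and Y in a linear Gaussian model.
rng = np.random.default_rng(0)
n = 5000
z = rng.normal(size=n)
x = 2.0 * z + rng.normal(size=n)
y = -1.0 * z + rng.normal(size=n)

print("corr(X, Y):          ", np.corrcoef(x, y)[0, 1])        # clearly negative
print("partial corr, resid.:", partial_corr(x, y, z))          # close to 0
print("partial corr, prec.: ", partial_corr_from_precision(x, y, z))
```

Both functions compute the same sample quantity; the residual version makes explicit what "screening off" by a linear regression on $Z$ means.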

On the one hand, there could be higher-order dependences not detected by the correlations. On the other hand, the influence of Z on X and Y could be non-linear, and, in this case, it would not entirely be screened off by a linear regression on Z. This is why using partial correlations instead of independence tests can introduce two types of errors, namely accepting independence even though it does not hold, or rejecting it even though it holds, even in the limit of infinite sample size.
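
The second error type can be reproduced in a few lines. In this sketch (an illustrative model of our own), Z affects X and Y only through $Z^2$, so X and Y are independent given Z by construction; a linear regression on Z fails to screen this off, whereas a quadratic fit does. The quadratic fit works here only because we know the true functional form; it is not a general remedy.

```python
import numpy as np

def residual_corr(x, y, z, degree):
    """Correlate the residuals of polynomial regressions of X on Z and of Y on Z."""
    rx = x - np.polyval(np.polyfit(z, x, degree), z)
    ry = y - np.polyval(np.polyfit(z, y, degree), z)
    return np.corrcoef(rx, ry)[0, 1]

rng = np.random.default_rng(0)
n = 5000
z = rng.normal(size=n)
# Non-linear influence of Z; the noise terms of X and Y are independent.
x = z**2 + 0.5 * rng.normal(size=n)
y = z**2 + 0.5 * rng.normal(size=n)

linear = residual_corr(x, y, z, degree=1)      # partial correlation: large
quadratic = residual_corr(x, y, z, degree=2)   # correct functional form: close to 0
print(f"linear: {linear:.2f}, quadratic: {quadratic:.2f}")
```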

Conditional independence testing is a challenging problem, and, therefore, we always trust the results of unconditional tests more than those of conditional tests. If their independence is accepted, then X independent of Y given Z necessarily holds. Hence, we have in the infinite sample limit only the risk of rejecting independence although it does hold, while the second type of error, namely accepting conditional independence although it does not hold, is only possible due to finite sampling, but not in the infinite sample limit.

Consider the case of two variables A and B, which are unconditionally independent, and then become dependent once conditioning on a third variable C. The only logical interpretation of such a statistical pattern in terms of causality (given that there are no hidden common causes) would be that C is caused by A and B, that is, C is their common effect.
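
A minimal simulation of this pattern, assuming a linear Gaussian model A → C ← B so that partial correlation can serve as the conditional test. The coefficients and the helper name `corr_given` are illustrative choices of ours.

```python
import numpy as np

def corr_given(x, y, z):
    """Correlation of X and Y after linearly regressing both on Z."""
    rx = x - np.polyval(np.polyfit(z, x, 1), z)
    ry = y - np.polyval(np.polyfit(z, y, 1), z)
    return np.corrcoef(rx, ry)[0, 1]

rng = np.random.default_rng(0)
n = 20_000
a = rng.normal(size=n)
b = rng.normal(size=n)
c = a + b + 0.5 * rng.normal(size=n)   # A -> C <- B: C is the common effect

corr_ab = np.corrcoef(a, b)[0, 1]      # near 0: A and B independent
corr_ab_given_c = corr_given(a, b, c)  # clearly negative once C is fixed
print(f"corr(A, B) = {corr_ab:.2f}, corr(A, B | C) = {corr_ab_given_c:.2f}")
```

Intuitively, once C is known, a high value of A "explains away" part of C, so B must be correspondingly lower, hence the negative conditional correlation.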

Another illustration of how causal inference can be based on conditional and unconditional independence testing is provided by the example of a Y-structure in Box 1. In general, however, the pattern of conditional independences does not determine every causal direction: ambiguities may remain and some causal relations will be unresolved. We therefore complement the conditional independence-based approach with other techniques: additive noise models, and non-algorithmic inference by hand. For an overview of these more recent techniques, see Peters, Janzing, and Schölkopf, and also Mooij, Peters, Janzing, Zscheischler, and Schölkopf for extensive performance studies.

Let us consider the following toy example of a pattern of conditional independences that admits inferring a definite causal influence from X on Y, despite possible unobserved common causes. $Z_1$ is independent of $Z_2$. Another example, including hidden common causes (the grey nodes), is shown on the right-hand side. Both causal structures, however, coincide regarding the causal relation between X and Y, and state that X is causing Y in an unconfounded way.

In other words, the statistical dependence between X and Y is entirely due to the influence of X on Y, without a hidden common cause (see Mani, Cooper, and Spirtes, and Section 2).

Similar statements hold when the Y-structure occurs as a subgraph of a larger DAG, and $Z_1$ and $Z_2$ become independent after conditioning on some additional set of variables. Scanning quadruples of variables in the search for independence patterns from Y-structures can aid causal inference. The figure on the left shows the simplest possible Y-structure. On the right, there is a causal structure involving latent variables (these unobserved variables are marked in grey), which entails the same conditional independences on the observed variables as the structure on the left.
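
A simulation sketch of the simplest Y-structure, assuming it is $Z_1 \to X \leftarrow Z_2$ with $X \to Y$ (the standard form in the literature cited above) and a linear Gaussian model with illustrative coefficients, so that partial correlations stand in for conditional independence tests.

```python
import numpy as np

def partial_corr(x, y, z):
    """Correlation of X and Y after linearly regressing both on a scalar Z."""
    rx = x - np.polyval(np.polyfit(z, x, 1), z)
    ry = y - np.polyval(np.polyfit(z, y, 1), z)
    return np.corrcoef(rx, ry)[0, 1]

rng = np.random.default_rng(0)
n = 20_000
z1 = rng.normal(size=n)
z2 = rng.normal(size=n)
x = z1 + z2 + rng.normal(size=n)   # Z1 -> X <- Z2
y = 1.5 * x + rng.normal(size=n)   # X -> Y

checks = {
    "corr(Z1, Z2)":     np.corrcoef(z1, z2)[0, 1],  # ~0: Z1 independent of Z2
    "corr(Z1, Z2 | X)": partial_corr(z1, z2, x),    # nonzero: X is a collider
    "corr(Z1, Y | X)":  partial_corr(z1, y, x),     # ~0: X screens Z1 off from Y
    "corr(Z2, Y | X)":  partial_corr(z2, y, x),     # ~0: X screens Z2 off from Y
}
for name, value in checks.items():
    print(f"{name:17s} {value:+.2f}")
```

The collider at X orients $Z_1 \to X \leftarrow Z_2$, and the fact that X screens both $Z_1$ and $Z_2$ off from Y is what rules out a confounded X-Y link in this pattern.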

Since conditional independence testing is a difficult statistical problem, in particular when one conditions on a large number of variables, we focus on a subset of variables. We first test all unconditional statistical independences between X and Y for all pairs X, Y of variables in this set. To avoid serious multi-testing issues and to increase the reliability of every single test, we do not perform tests for independences of the form $X \perp Y \mid Z_1, Z_2$, that is, conditioning on two or more variables at once. We then construct an undirected graph where we connect each pair that is neither unconditionally nor conditionally independent.
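
The skeleton construction described above can be sketched as follows. As a swapped-in assumption, the independence test here is a Fisher z-test on (partial) correlations, which is only appropriate under the Gaussian assumption discussed earlier; a kernel test such as HSIC would be the non-linear alternative. Function names and the significance level are hypothetical.

```python
import itertools
import math
import numpy as np

def pcorr(data, i, j, k=None):
    """Correlation of columns i and j, or their partial correlation given column k."""
    if k is None:
        return np.corrcoef(data[:, i], data[:, j])[0, 1]
    P = np.linalg.inv(np.corrcoef(data[:, [i, j, k]], rowvar=False))
    return -P[0, 1] / math.sqrt(P[0, 0] * P[1, 1])

def independent(data, i, j, k=None, alpha=0.01):
    """Fisher z-test on the (partial) correlation; True if independence is accepted."""
    n = data.shape[0]
    r = pcorr(data, i, j, k)
    n_cond = 0 if k is None else 1
    z = 0.5 * math.log((1 + r) / (1 - r)) * math.sqrt(n - n_cond - 3)
    p_value = math.erfc(abs(z) / math.sqrt(2))   # two-sided normal p-value
    return p_value > alpha

def skeleton(data, alpha=0.01):
    """Edges (i, j) for pairs that are neither unconditionally independent nor
    independent given any single other variable (no larger conditioning sets)."""
    d = data.shape[1]
    edges = set()
    for i, j in itertools.combinations(range(d), 2):
        if independent(data, i, j, alpha=alpha):
            continue
        if any(independent(data, i, j, k, alpha) for k in range(d) if k not in (i, j)):
            continue
        edges.add((i, j))
    return edges

# Usage: a chain X0 -> X1 -> X2, where X0 and X2 are independent given X1.
rng = np.random.default_rng(0)
n = 2000
x0 = rng.normal(size=n)
x1 = x0 + rng.normal(size=n)
x2 = x1 + rng.normal(size=n)
edges = skeleton(np.column_stack([x0, x1, x2]), alpha=0.001)
print(edges)
```

For this chain one would expect the edges X0 - X1 and X1 - X2 to survive and X0 - X2 to be dropped, although any single finite-sample test can err.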

Whenever the number d of variables is larger than 3, it is possible that we obtain too many edges, because independence tests conditioning on more variables could render X and Y independent. We take this risk, however, for the above reasons. In some cases, the pattern of conditional independences also allows the direction of some of the edges to be inferred: whenever the resulting undirected graph contains the pattern X - Z - Y, where X and Y are non-adjacent, and we observe that X and Y are independent but conditioning on Z renders them dependent, then Z must be the common effect of X and Y (that is, X → Z ← Y).
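
The orientation rule can be written as a small function over an undirected graph. In this sketch the independence results come from a hypothetical lookup table (an "oracle") rather than from data; in practice they would come from the tests described above. Only the collider rule is implemented; further propagation rules used by algorithms such as PC are not covered.

```python
import itertools

def orient_colliders(nodes, edges, indep):
    """Return arrows (X, Z) and (Y, Z) for every X - Z - Y with X, Y non-adjacent,
    X independent of Y, but X and Y dependent given Z.

    `edges` is a set of frozensets {a, b}; `indep(a, b, given=None)` returns
    True when (conditional) independence is accepted.
    """
    arrows = set()
    for x, y in itertools.combinations(nodes, 2):
        if frozenset((x, y)) in edges:
            continue                        # only non-adjacent pairs qualify
        for z in nodes:
            if frozenset((x, z)) in edges and frozenset((y, z)) in edges:
                if indep(x, y) and not indep(x, y, given=z):
                    arrows.update({(x, z), (y, z)})
    return arrows

# Hypothetical oracle for A -> C <- B: A, B independent, dependent given C.
facts = {("A", "B", None): True, ("A", "B", "C"): False}

def oracle(a, b, given=None):
    return facts.get((a, b, given), facts.get((b, a, given), False))

edges = {frozenset(("A", "C")), frozenset(("B", "C"))}
arrows = orient_colliders(["A", "B", "C"], edges, oracle)
print(sorted(arrows))   # [('A', 'C'), ('B', 'C')]
```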

For this reason, we also perform conditional independence tests for pairs of variables that have already been verified to be unconditionally independent. From the point of view of constructing the skeleton (i.e. the undirected graph), these additional tests would be redundant, since such pairs remain unconnected anyway; they are needed only to detect the collider pattern. This argument, like the whole procedure above, assumes causal sufficiency, i.e. the absence of hidden common causes. It is therefore remarkable that the additive noise method below is, in principle (under certain, admittedly strong, assumptions), able to detect the presence of hidden common causes (see Janzing et al.).

Our second technique builds on the insight that causal inference can exploit statistical information contained in the distribution of the error terms, and it focuses on two variables at a time. Causal inference based on additive noise models (ANM) complements the conditional independence-based approach outlined in the previous section, because it can distinguish between possible causal directions between variables that have the same set of conditional independences.

With additive noise models, inference proceeds by analysis of the patterns of noise between the variables or, put differently, the distributions of the residuals. Assume Y is a function of X up to an independent and identically distributed (IID) additive noise term that is statistically independent of X, i.e.

$$Y = f(X) + N_Y, \qquad N_Y \perp X.$$

Figure 2 visualizes the idea, showing that the noise cannot be independent in both directions. To see a real-world example, Figure 3 shows the first example from a database containing cause-effect variable pairs for which we believe we know the causal direction (footnote 5).

Up to some noise, Y (temperature) is given by a function of X (altitude) that is close to linear except at low altitudes. Phrased in terms of the language above, writing X as a function of Y yields a residual error term that is highly dependent on Y. On the other hand, writing Y as a function of X yields a noise term that is largely homogeneous along the x-axis.

Hence, the noise is almost independent of X. Accordingly, additive noise-based causal inference indeed infers altitude to be the cause of temperature (Mooij et al.). Furthermore, this example of altitude causing temperature, rather than vice versa, highlights how, in a thought experiment of a cross-section of paired altitude-temperature data points, the causality runs from altitude to temperature even if our cross-section has no information on time lags.

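Indeed, time lags are not always necessary for causal inference (footnote 6), and causal identification can uncover instantaneous effects.

In practice, one first regresses Y on X and tests whether the residual is independent of X. Then one does the same, exchanging the roles of X and Y.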
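
A compact sketch of the two-direction procedure. Several simplifications are our own assumptions: regression is a degree-3 polynomial fit, and instead of formal accept/reject tests in each direction we compare the HSIC dependence scores of the two residuals and pick the direction with the less dependent residual. A faithful implementation of the rule above would use p-values (e.g. from a permutation test) and would draw no conclusion when both directions are accepted or both rejected.

```python
import numpy as np

def centred_gram(v):
    """Gaussian-kernel Gram matrix (median-heuristic bandwidth), double-centred."""
    v = np.asarray(v, dtype=float).reshape(-1, 1)
    sq = (v - v.T) ** 2
    K = np.exp(-sq / np.median(sq[sq > 0]))
    H = np.eye(len(v)) - 1.0 / len(v)
    return H @ K @ H

def hsic(a, b):
    """Biased empirical HSIC; larger values indicate stronger dependence."""
    return np.sum(centred_gram(a) * centred_gram(b)) / len(a) ** 2

def anm_direction(x, y, degree=3):
    """Fit Y = f(X) + N and X = g(Y) + N; the direction whose residual is
    less dependent on its input is taken as the more plausible causal one."""
    res_y = y - np.polyval(np.polyfit(x, y, degree), x)   # noise if X -> Y
    res_x = x - np.polyval(np.polyfit(y, x, degree), y)   # noise if Y -> X
    score_xy, score_yx = hsic(x, res_y), hsic(y, res_x)
    return ("X -> Y" if score_xy < score_yx else "Y -> X"), score_xy, score_yx

# Usage with a simulated pair where X causes Y through a non-linear function.
rng = np.random.default_rng(0)
n = 300
x = rng.uniform(-2, 2, size=n)
y = x**3 + rng.uniform(-1, 1, size=n)
direction, score_xy, score_yx = anm_direction(x, y)
print(direction, score_xy, score_yx)
```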

To our knowledge, the theory of additive noise models has only recently been developed in the machine learning literature (Hoyer et al.). To show this, Janzing and Steudel derive a differential equation that expresses the second derivative of the logarithm of $p(y)$ in terms of derivatives of $\log p(x \mid y)$.

First, the predominance of unexplained variance can be interpreted as a limit on how much omitted variable bias (OVB) can be reduced by including the available control variables, because innovative activity is fundamentally difficult to predict. In contrast, "Had I been dead" contradicts known facts. Although we cannot expect to find joint distributions of binaries and continuous variables in our real data for which the causal directions are as obvious as for the cases in Figure 4, we will still try to get some hints.

For the correlation analysis presented in the article, I considered the following control variables: income, age, sex, health improvement and population. Finally, the study in genetics by Penn and Smith holds that there is a genetic trade-off, where genes that increase reproductive potential early in life increase the risk of disease and mortality later in life.
The fertility rate over the period shows a similar behavior, ranging on average from a value of 4 to 7. Future work could extend these techniques from cross-sectional data to panel data. However, a direct relationship would imply causation. Three applications are discussed: funding for innovation, information sources for innovation, and innovation expenditures and firm growth. Counterfactual questions are also questions about intervening. Suggested citation: Coad, A. Future work could also investigate which of the three particular tools discussed above works best in which particular context.
The general idea behind the analyzed correlation is that a person with a high life expectancy is associated with a lower number of children than a person with a lower life expectancy. However, this relationship does not imply causation [2], since it can also be read the other way round: a person with a lower number of children could be associated with a longer life expectancy. For a justification of the reasoning behind the likely direction of causality in additive noise models, we refer to Janzing and Steudel. The usual caveats apply.

The edge scon-sjou has been directed via discrete ANM. They assume causal faithfulness. To determine whether an apparent correlation reflects a direct cause-and-effect association (a causal relationship), additional control experiments must be carried out. For the special case of a simple bivariate causal relation with cause and effect, it states that the shortest description of the joint distribution P(cause, effect) is given by separate descriptions of P(cause) and P(effect | cause). This reflects our interest in seeking broad characteristics of the behaviour of innovative firms, rather than focusing on possible local effects in particular countries or regions. Indeed, the causal arrow is suggested to run from sales to sales, which is in line with expectations.
If independence of the residual is accepted for one direction but not the other, the former is inferred to be the causal one. Nevertheless, we argue that these data are sufficient for our purposes of analysing causal relations between variables relating to innovation and firm growth in a sample of innovative firms.

Bloebaum, Janzing, Washio, Shimizu, and Schölkopf, for instance, infer the causal direction simply by comparing the size of the regression errors in least-squares regression, and describe conditions under which this is justified. This paper is heavily based on a report for the European Commission (Janzing). If independence is either accepted or rejected for both directions, nothing can be concluded. These techniques were then applied to very well-known data on firm-level innovation, the EU Community Innovation Survey (CIS) data, in order to obtain new insights. In addition, at the time of writing, the wave was already rather dated. Source: Figures are taken from Janzing and Schölkopf, and Janzing et al.
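
A rough sketch of the regression-error comparison attributed above to Bloebaum et al. The rescaling of both variables to [0, 1], the degree-3 polynomial regression and the simulated low-noise example are our own choices; their analysis is carried out in a low-noise regime, and this sketch does not check whether its conditions hold.

```python
import numpy as np

def regression_error_direction(x, y, degree=3):
    """Rescale both variables to [0, 1], regress in both directions and take
    the direction with the smaller mean squared error as the causal one."""
    def rescale(v):
        v = np.asarray(v, dtype=float)
        return (v - v.min()) / (v.max() - v.min())

    x, y = rescale(x), rescale(y)
    mse_xy = np.mean((y - np.polyval(np.polyfit(x, y, degree), x)) ** 2)
    mse_yx = np.mean((x - np.polyval(np.polyfit(y, x, degree), y)) ** 2)
    return ("X -> Y" if mse_xy < mse_yx else "Y -> X"), mse_xy, mse_yx

# Usage: X causes Y through a monotone non-linear function with little noise.
rng = np.random.default_rng(0)
n = 2000
x = rng.uniform(0, 1, size=n)
y = x**3 + 0.02 * rng.normal(size=n)
direction, mse_xy, mse_yx = regression_error_direction(x, y)
print(direction, mse_xy, mse_yx)
```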
Likewise, the biological study by Kirkwood concludes that the energetic and metabolic costs associated with reproduction may lead to a deterioration in the maternal condition, increasing the risk of disease and thus leading to higher mortality. Our results suggest the former. Our results, although preliminary, complement existing findings by offering causal interpretations of previously observed correlations. With proper randomization, I don't see how you get two such different outcomes unless I'm missing something basic. Regarding life expectancy, the oscillation of this variable decreased over time, registering first a level between 50 and 70 years and later a level between 70 and 80 years. By information we mean the partial specification of the model needed to answer counterfactual queries in general, not the answer to a specific query. In this paper, we apply ANM-based causal inference only to discrete variables that attain at least four different values.
