How to calculate correlation between two variables in R

The concept of correlation entails having a pair of observations X and Y, that is to say, the value that Y takes for a given value of X; correlation makes it possible to examine the tendency of two variables to vary together.

I have a correlation matrix of security returns whose determinant is zero. This is a bit surprising, since the sample correlation matrix and the corresponding covariance matrix should theoretically be positive definite.

My hypothesis is that at least one security is linearly dependent on other securities. Is there a function in R that sequentially tests each column of a matrix for linear dependence? For example, one approach would be to build up a correlation matrix one security at a time and calculate the determinant at each step.

You seem to ask a really provoking question: how to detect, given a singular correlation (or covariance, or sum-of-squares-and-cross-product) matrix, which column is linearly dependent on which. I tentatively suppose that the sweep operation could help. Notice that eventually column 5 got full of zeros. This means (as I understand it) that V5 is linearly tied with some of the preceding columns. Which columns? Look at the iteration where column 5 is last not full of zeros: iteration 4.

We see there that V5 is tied with V2 and V4, along with the corresponding coefficients. That's how we knew which column is linearly tied with which other. I didn't check how helpful the above approach is in the more general case with many groups of interdependencies in the data. In the above example it appeared helpful, though.

Here's a straightforward approach: compute the rank of the matrix that results from removing each of the columns.

The columns which, when removed, result in the highest rank are the linearly dependent ones (since removing those does not decrease rank, while removing a linearly independent column does).

The quick and easy way to detect relationships is to regress any other variable (use a constant, even) against those variables using your favorite software: any good regression procedure will detect and diagnose collinearity.
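The column-removal rank check described above can be sketched in R roughly as follows. This is only an illustration under stated assumptions: the data are simulated, the column names V1 to V5 and the helper `mat_rank` are hypothetical, and the rank is taken from base R's pivoted QR decomposition with its default tolerance.

```r
# Hypothetical example data: V5 is an exact linear combination of V2 and V4.
set.seed(1)
X <- matrix(rnorm(50 * 4), ncol = 4, dimnames = list(NULL, paste0("V", 1:4)))
X <- cbind(X, V5 = 2 * X[, "V2"] - X[, "V4"])

# Numerical rank via the pivoted QR decomposition (hypothetical helper).
mat_rank <- function(m) qr(m)$rank

# Rank of the matrix after dropping each column in turn.
rank_without <- sapply(seq_len(ncol(X)),
                       function(j) mat_rank(X[, -j, drop = FALSE]))
names(rank_without) <- colnames(X)

# Columns whose removal does not lower the rank take part in a dependency.
dependent_cols <- names(rank_without)[rank_without == mat_rank(X)]
print(rank_without)
print(dependent_cols)
```

Note that this flags every column involved in the dependency, not a single "culprit": which one to drop remains a modelling decision.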

You will not even bother to look at the regression results: we're just relying on a useful side-effect of setting up and analyzing the regression matrix. Assuming collinearity is detected, though, what next? Principal Components Analysis (PCA) is exactly what is needed: its smallest components correspond to near-linear relations. These relations can be read directly off the "loadings," which are linear combinations of the original variables.

Small loadings (that is, those associated with small eigenvalues) correspond to near-collinearities. Slightly larger eigenvalues that are still much smaller than the largest would correspond to approximate linear relations. There is an art and quite a lot of literature associated with identifying what a "small" loading is. For modeling a dependent variable, I would suggest including it within the independent variables in the PCA in order to identify the components (regardless of their sizes) in which the dependent variable plays an important role.
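To make the idea concrete, here is a minimal sketch of reading a near-linear relation off the smallest principal component. It is not the function from the original answer: the simulated variables A to E, the noise level, and the relative threshold of 1e-3 for a "small" component are all assumptions chosen for illustration.

```r
# Simulated data (assumption): A is almost exactly B + 2*C; D and E are unrelated.
set.seed(2)
n <- 200
X <- matrix(rnorm(n * 4), ncol = 4,
            dimnames = list(NULL, c("B", "C", "D", "E")))
X <- cbind(A = X[, "B"] + 2 * X[, "C"], X)
X <- X + rnorm(length(X), sd = 0.01)   # small i.i.d. noise on every variable

# PCA on centered, unscaled data.
pca <- prcomp(X, center = TRUE, scale. = FALSE)

# Component variances relative to the largest one.
rel_var <- pca$sdev^2 / max(pca$sdev^2)
small <- which(rel_var < 1e-3)         # threshold is an arbitrary choice

# Loadings of the small components, rescaled so that the coefficient
# largest in absolute value equals 1, which makes the relation easier to read.
relations <- pca$rotation[, small, drop = FALSE]
scale_by <- apply(relations, 2, function(v) v[which.max(abs(v))])
relations <- sweep(relations, 2, scale_by, "/")
print(round(relations, 2))
```

Each column of `relations` is a combination of the variables that has almost no variance; here it should be close to a multiple of A - B - 2C, with D and E near zero.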

From this point of view, "small" means much smaller than any such component. Let's look at some examples. These use R for the calculations and plotting. Begin with a function to perform PCA, look for small components, plot them, and return the linear relations among them. Let's apply this to some random data.

The simulation then adds i.i.d. Normally-distributed values to all five variables to see how well the procedure performs when multicollinearity is only approximate and not exact. First, however, note that PCA is almost always applied to centered data, so these simulated data are centered (but not otherwise rescaled) using sweep. Here we go with two scenarios and three levels of error applied to each. The coefficients are still close to what we expected, but they are not quite the same due to the error introduced.

With more error, the thickening becomes comparable to the original spread of the points, making the hyperplane almost impossible to estimate. Now in the upper right panel the coefficients are. In practice, it is often not the case that one variable is singled out as an obvious combination of the others: all coefficients may be of comparable sizes and of varying signs.

Moreover, when there is more than one dimension of relations, there is no unique way to specify them: further analysis such as row reduction is needed to identify a useful basis for those relations. That's how the world works: all you can say is that these particular combinations that are output by PCA correspond to almost no variation in the data. To cope with this, some people use the largest "principal" components directly as the independent variables in the regression or the subsequent analysis, whatever form it might take.

If you do this, do not forget first to remove the dependent variable from the set of variables and redo the PCA! I had to fiddle with the threshold in the large-error cases in order to display just a single component: that's the reason for supplying this value as a parameter to process. User ttnphns has kindly directed our attention to a closely related thread.

One of its answers by J.

Once you have the singular values, check how many of those are "small" (a usual criterion is that a singular value is "small" if it is less than the largest singular value times the machine precision). If there are any "small" singular values, then yes, you have linear dependence.

I ran into this issue roughly two weeks ago and decided that I needed to revisit it because when dealing with massive data sets, it is impossible to do these things manually.
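The singular-value check can be sketched as below. The data are simulated, and one detail differs from the criterion quoted above: the tolerance is additionally multiplied by the larger matrix dimension. That is an assumption on my part (a common convention in numerical libraries), used so that rounding error in the computed singular values does not hide an exact dependence.

```r
# Simulated matrix (assumption): the 4th column is column 1 minus column 2.
set.seed(3)
X <- matrix(rnorm(30 * 3), ncol = 3)
X <- cbind(X, X[, 1] - X[, 2])

# Singular values, returned in decreasing order.
d <- svd(X)$d

# "Small" means below largest singular value * machine precision,
# here loosened by the larger matrix dimension (an assumption).
tol <- max(dim(X)) * max(d) * .Machine$double.eps
n_small <- sum(d < tol)

print(d)
print(n_small)   # number of independent linear relations detected
```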

I created a for loop that calculates the rank of the matrix one column at a time. So for the first iteration, the rank will be 1. The second, 2. This occurs until the rank becomes LESS than the column number you are using. I am sure that you can add an if statement; I don't need it yet because I am only dealing with 50ish columns.

Not that the answer whuber gave really needs to be expanded on, but I thought I'd provide a brief description of the math.
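A loop of the kind described above might look like this. It is a sketch, not the poster's code: the data are simulated, the column names are hypothetical, and the `if` statement mentioned in the answer is included so the loop stops at the first offending column.

```r
# Simulated data (assumption): V4 is a linear combination of V1 and V3.
set.seed(4)
X <- matrix(rnorm(40 * 5), ncol = 5, dimnames = list(NULL, paste0("V", 1:5)))
X[, "V4"] <- 0.5 * X[, "V1"] + X[, "V3"]

first_dependent <- NA_character_
for (k in seq_len(ncol(X))) {
  # Rank of the first k columns; it equals k while they are independent.
  r <- qr(X[, 1:k, drop = FALSE])$rank
  if (r < k) {
    first_dependent <- colnames(X)[k]
    break
  }
}
print(first_dependent)
```

As the comments in the thread point out, the column reported depends on the column order, so the result identifies a dependency rather than a unique culprit.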

A general rule of thumb is that modest multicollinearity is associated with a condition index between 100 and 1,000, while severe multicollinearity is associated with a condition index above 1,000 (Montgomery). It's important to use an appropriate method for determining if an eigenvalue is small because it's not the absolute size of the eigenvalues, it's the relative size of the condition index that's important, as can be seen in an example.
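As a rough illustration of the condition index, the sketch below computes it from the eigenvalues of a correlation matrix. It assumes the condition index is the ratio of the largest eigenvalue to each eigenvalue; some authors use the square root of that ratio instead, so check the convention before applying a threshold. The variables x1, x2, x3 are simulated.

```r
# Simulated predictors (assumption): x3 is nearly x1 + x2.
set.seed(5)
n <- 100
x1 <- rnorm(n)
x2 <- rnorm(n)
x3 <- x1 + x2 + rnorm(n, sd = 0.01)

# Eigenvalues of the correlation matrix, in decreasing order.
R <- cor(cbind(x1, x2, x3))
ev <- eigen(R, symmetric = TRUE)$values

# Condition indices: largest eigenvalue relative to each eigenvalue.
cond_index <- max(ev) / ev
print(round(cond_index, 1))
```

The point of the ratio is that only the relative size matters: rescaling all eigenvalues by the same factor leaves `cond_index` unchanged.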

Montgomery, D. Introduction to Linear Regression Analysis, 5th Edition.

Testing for linear dependence among the columns of a matrix

Any other techniques to identify linear dependence in such a matrix are appreciated.

Ram Ahluwalia

In general you find that the larger the time series, the more the sample covariance matrix tends to be positive definite.

However, there are many cases where you'd like to use a substantially smaller value of T (or exponentially weight) to reflect recent market conditions. So there is no procedure for doing this, and the suggested procedure will pick a quite arbitrary security depending on the order they are included. The matrix A has dimensions x

Let's generate some data with columns v1, v2, v3, v4, v5. So, we modified our column V4. The printouts of M in 5 iterations:

The columns of the output are linearly dependent?

But I wonder how issues of numerical precision are going to affect this method.

Is it best to do this with chunks of the data at a time? Also, do you remove anything if you detect collinearity using the regression beforehand? From what I understand about PCA generally, you use the largest PCs (those explaining most of the variance, based on the eigenvalues), and these are loaded to varying degrees on the original variables.

Yes, you can use subgroups of variables if you like. The regression method is just to detect the presence of collinearity, not to identify the collinear relations: that's what the PCA does.

Especially with large numbers of columns it can fail to detect near-collinearity and falsely detect collinearity where none exists.

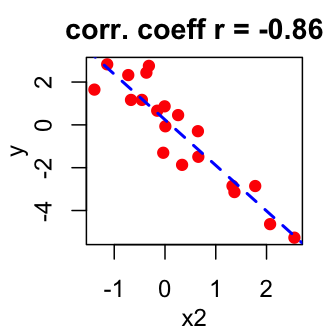

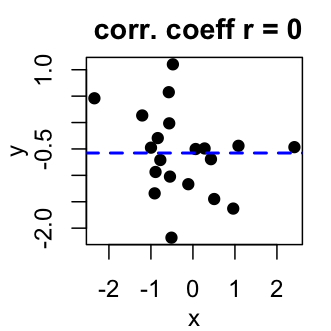

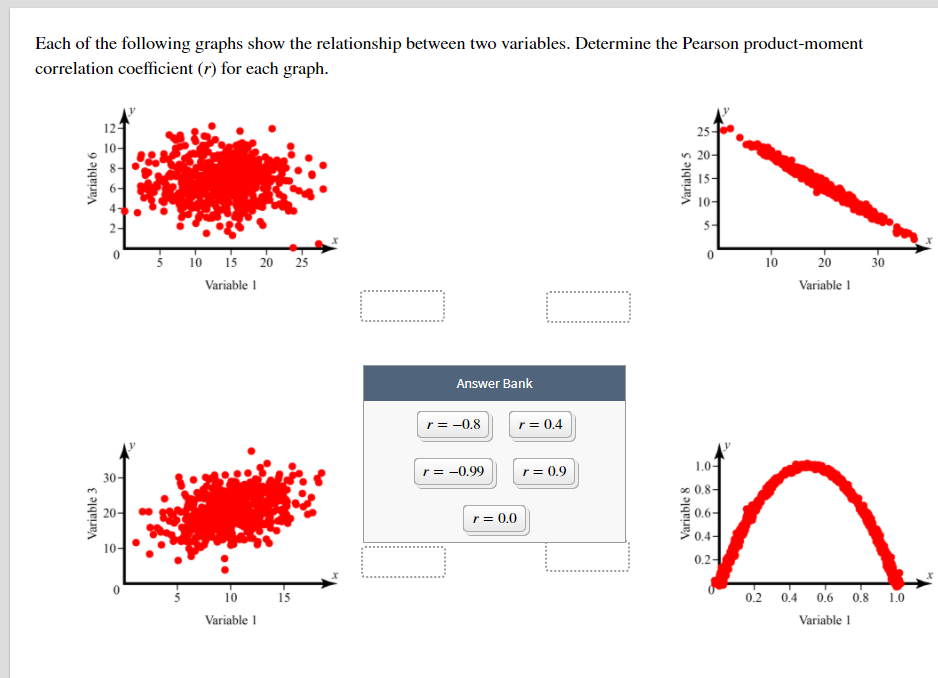

Note that you can add smoothed regression lines by passing a panel function. With the pairs function you can create a correlation plot from a data frame.

Not every correlation implies causation. Values close to 1 imply that a large proportion of the variability of Y is explained by X. The correlation coefficient, like other statistical tests, depends on the sample size. In Figure 3 it is not possible to observe a linear relationship between the two variables.

But notice that the horizontal line has an undefined correlation. Then a column for the error is added based on the desired value of r2.
If these variables had a perfect correlation, the value of the variable Y could be deduced by knowing the value of X. Each point represents the intersection of a pair of observations (X, Y).

Spearman's correlation is based on replacing the original value of each variable with its rank, as can be seen in its formula.

It is necessary to distinguish between the concepts of causality and correlation. This would be the case if we wanted to show the correlation between daily chocolate consumption and intelligence quotient.

The following plots are accompanied by their Pearson product-moment correlation coefficients. The cause and effect study design above first samples the predictor variables with a given degree of correlation among each other.

The gclus package provides a function very similar to the previous one, called cpairs.

Correlation plot in R
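As a minimal sketch of such a plot, the example below uses the built-in iris data set as a stand-in for your own data frame (an assumption for illustration). It draws a scatterplot matrix with pairs and passes panel.smooth, a base graphics panel function, to add a smoothed line to each panel.

```r
# Numeric columns of the built-in iris data (illustrative stand-in data).
num_data <- iris[, 1:4]

# Pairwise Pearson correlations, as a numeric companion to the plot.
corr_matrix <- cor(num_data)
print(round(corr_matrix, 2))

# Scatterplot matrix with a smoothed line added to every panel.
pairs(num_data, panel = panel.smooth, main = "Pairwise scatterplots")
```

The printed matrix and the plot show the same information in two forms: the matrix gives the strength of each linear relation, while the panels reveal non-linear patterns that a single coefficient can hide.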

The correlation coefficient is an indicator used to describe quantitatively the strength and direction of the relationship between two normally distributed quantitative variables, and it helps determine the tendency of two variables to go together, which is also called covariance.

Figure 4. Result of the normality test for body mass index (BMI) and fat percentage.
In this article we address the statistical tests that make it possible to know the strength of association or relationship between two quantitative or ordinal variables, and whose result is expressed by the correlation coefficient.

If both variables are normally distributed, we calculate Pearson's correlation; if this normality assumption is not met, or the variables are ordinal, Spearman's correlation should be calculated 12.

Figure 1. Positive correlation.

Model residuals are conditionally independent. The Gauss-Markov theorem still applies even if residuals aren't normal, for instance, though lack of normality can have other impacts on the interpretation of results (t tests, confidence intervals, etc.).
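The choice between Pearson and Spearman described above can be sketched in R as follows. The data are simulated, and using the Shapiro-Wilk test with a 0.05 cutoff as the normality check is an assumption of this example; the text does not say which normality test was used.

```r
# Simulated pair of variables (assumption): y depends linearly on x plus noise.
set.seed(6)
x <- rnorm(50)
y <- 0.8 * x + rnorm(50, sd = 0.5)

# Normality check for both variables (Shapiro-Wilk is an assumed choice).
both_normal <- shapiro.test(x)$p.value > 0.05 &&
  shapiro.test(y)$p.value > 0.05

# Pearson if both look normal, otherwise the rank-based Spearman coefficient.
method <- if (both_normal) "pearson" else "spearman"

r <- cor(x, y, method = method)          # the coefficient itself
test <- cor.test(x, y, method = method)  # coefficient plus a significance test

print(method)
print(r)
print(test$p.value)
```

For ordinal variables the normality check is beside the point and `method = "spearman"` would be passed directly.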

I'll share a more verbose implementation: this matrix has to be positive definite, but doesn't have to have constant off the diagonal and doesn't have to have 1s on the diagonal. In fact, I don't think 5 applies to real data!

I would hesitate to guess that it should be 1, given the data fits the model perfectly?

Suppose that the objective of a study is to determine whether there is a relationship between body mass index and body fat percentage measured by bone densitometry. This test makes it possible to quantify the magnitude of the correlation between two variables and helps with prediction.

With the apropos function you can list them all:
Since our variables are not normally distributed, we select the Spearman correlation option (Figure 6).

The coefficient of determination is obtained by squaring the value of the correlation coefficient (r2).
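The relation between the correlation coefficient and the coefficient of determination can be checked with a short sketch. The data are simulated (an assumption), and the comparison with lm holds for simple linear regression with one predictor and an intercept.

```r
# Simulated data (assumption): a noisy linear relation between x and y.
set.seed(7)
x <- rnorm(60)
y <- 1 + 2 * x + rnorm(60)

# Pearson correlation and its square.
r <- cor(x, y)
r2 <- r^2

# R-squared reported by a simple linear regression of y on x.
fit_r2 <- summary(lm(y ~ x))$r.squared

print(c(r = r, r2 = r2, fit_r2 = fit_r2))
```

The two values agree up to floating-point error, which is why values of r2 close to 1 can be read as a large share of the variability of y being explained by x.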
