
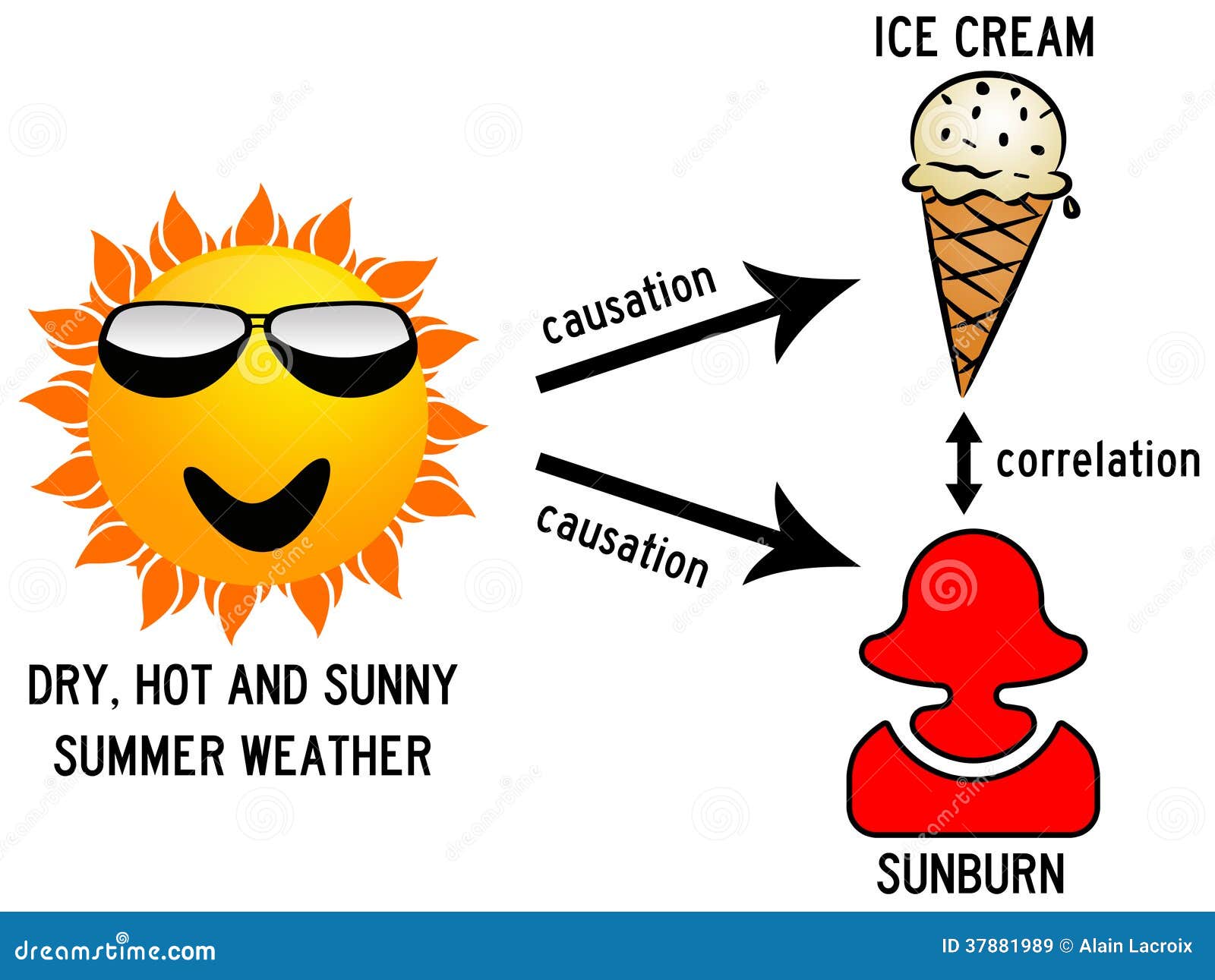

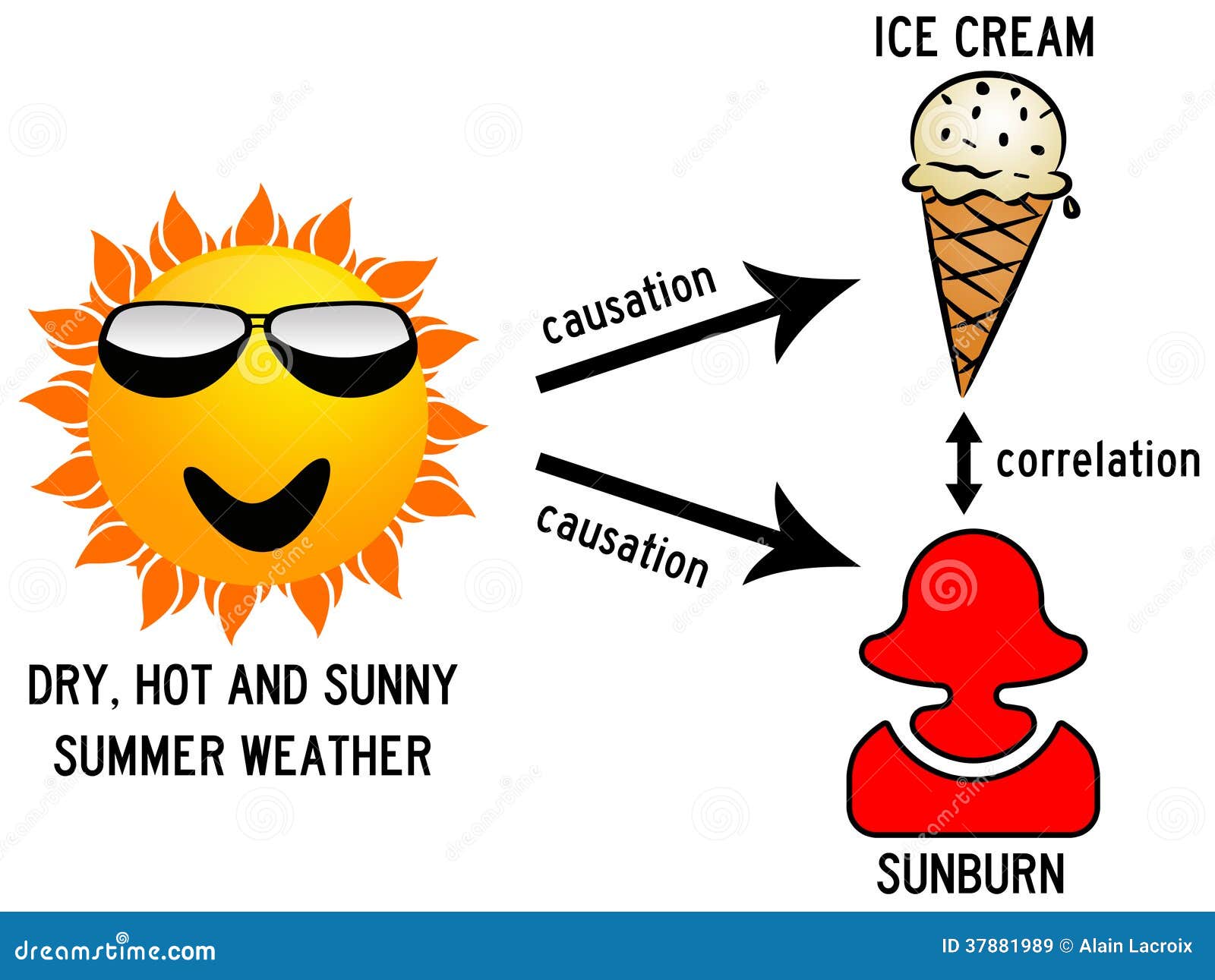

What are the differences between correlation and cause and effect


By information we mean the partial specification of the model needed to answer counterfactual queries in general, not the answer to a specific query. Indeed, the causal arrow is suggested to run from sales to sales, which is in line with expectations.

Tools for causal inference from cross-sectional innovation surveys with continuous or discrete variables: Theory and applications. Dominik Janzing, Paul Nightingale. This paper presents a new statistical toolkit by applying three techniques for data-driven causal inference from the machine learning community that are little-known among economists and innovation scholars: a conditional independence-based approach, additive noise models, and non-algorithmic inference by hand.

Preliminary results provide causal interpretations of some previously observed correlations. Our statistical 'toolkit' could be a useful complement to existing techniques. Keywords: Causal inference; innovation surveys; machine learning; additive noise models; directed acyclic graphs.

However, a long-standing problem for innovation scholars is obtaining causal estimates from observational data. For a long time, causal inference from cross-sectional surveys has been considered impossible. Hal Varian, Chief Economist at Google and Emeritus Professor at the University of California, Berkeley, commented on the value of machine learning techniques for econometricians:

My standard advice to graduate students these days is go to the computer science department and take a class in machine learning. There have been very fruitful collaborations between computer scientists and statisticians in the last decade or so, and I expect collaborations between computer scientists and econometricians will also be productive in the future.

(Hal Varian)

This paper seeks to transfer knowledge from computer science and machine learning communities into the economics of innovation and firm growth, by offering an accessible introduction to techniques for data-driven causal inference, as well as three applications to innovation survey datasets that are expected to have several implications for innovation policy.

The contribution of this paper is to introduce a variety of techniques, including very recent approaches for causal inference, to the toolbox of econometricians and innovation scholars: a conditional independence-based approach; additive noise models; and non-algorithmic inference by hand. These statistical tools are data-driven, rather than theory-driven, and can be useful alternatives to obtain causal estimates from observational data.

Several papers have previously introduced the conditional independence-based approach (Tool 1) in economic contexts such as monetary policy, macroeconomic SVAR (Structural Vector Autoregression) models, and corn price dynamics. A further contribution is that these new techniques are applied to three contexts in the economics of innovation. While most analyses of innovation datasets focus on reporting the statistical associations found in observational data, policy makers need causal evidence in order to understand if their interventions in a complex system of inter-related variables will have the expected outcomes.

This paper, therefore, seeks to elucidate the causal relations between innovation variables using recent methodological advances in machine learning. Two recent survey papers in the Journal of Economic Perspectives have highlighted how machine learning techniques can provide interesting results regarding statistical associations. Section 2 presents the three tools, and Section 3 describes our CIS dataset.

Section 4 contains the three empirical contexts: funding for innovation, information sources for innovation, and innovation expenditures and firm growth. Reichenbach's common cause principle states that if two variables X and Y are statistically dependent, then X causes Y, Y causes X, or both are caused by a third variable Z. In the common-cause case, Reichenbach postulated that X and Y are conditionally independent given Z. The fact that all three cases can also occur together is an additional obstacle for causal inference. For this study, we will mostly assume that only one of the cases occurs and try to distinguish between them, subject to this assumption.

We are aware that this oversimplifies many real-life situations. However, even if the cases interfere, one of the three types of causal links may be more significant than the others. It is also more valuable for practical purposes to focus on the main causal relations. A graphical approach is useful for depicting causal relations between variables (Pearl). Its central assumption, the causal Markov condition, states that every variable is conditionally independent of its non-descendants given its parents (its direct causes) in the graph. This condition implies that indirect (distant) causes become irrelevant when the direct (proximate) causes are known.

Figure 1: Directed acyclic graph. Source: the authors.

The density of the joint distribution p(x1, …, x6), if it exists, can therefore be factorized into the product of the conditional densities of each variable given its parents in the graph. The faithfulness assumption states that only those conditional independences occur that are implied by the graph structure.
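As a minimal sketch of this factorization, the Python code below uses a hypothetical three-variable chain x1 → x2 → x3 with binary variables and made-up probabilities (not the structure or parameters of Figure 1). It builds the joint distribution as the product of each variable's conditional distribution given its parents, checks that it sums to one, and shows that once the direct cause x2 is known, the indirect cause x1 carries no further information about x3.

```python
from itertools import product

# Hypothetical chain x1 -> x2 -> x3 with binary variables; all numbers are made up.
P_X1 = {0: 0.6, 1: 0.4}                    # p(x1): x1 has no parents
P_X2_GIVEN_X1 = {0: {0: 0.7, 1: 0.3},      # p(x2 | x1), indexed [x1][x2]
                 1: {0: 0.2, 1: 0.8}}
P_X3_GIVEN_X2 = {0: {0: 0.9, 1: 0.1},      # p(x3 | x2), indexed [x2][x3]
                 1: {0: 0.4, 1: 0.6}}


def joint(x1, x2, x3):
    """Markov factorization: product of each variable's distribution given its parents."""
    return P_X1[x1] * P_X2_GIVEN_X1[x1][x2] * P_X3_GIVEN_X2[x2][x3]


def p_x3_given_x1_x2(x3, x1, x2):
    """p(x3 | x1, x2), obtained from the joint by normalizing over x3."""
    return joint(x1, x2, x3) / sum(joint(x1, x2, v) for v in (0, 1))


total = sum(joint(*values) for values in product((0, 1), repeat=3))
print(round(total, 10))  # 1.0

# Knowing the direct cause x2 screens off the indirect cause x1:
for x2 in (0, 1):
    print(x2, [round(p_x3_given_x1_x2(1, x1, x2), 10) for x1 in (0, 1)])
# 0 [0.1, 0.1]
# 1 [0.6, 0.6]
```

The same product-of-conditionals structure applies to any DAG; only the parent sets change.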

This implies, for instance, that two variables with a common cause will not be rendered statistically independent by structural parameters that, perhaps by chance, are fine-tuned to exactly cancel each other out. This is conceptually similar to the assumption that one object does not perfectly conceal a second object directly behind it, eclipsing it from the line of sight of a viewer located at a specific viewpoint (Pearl).

In terms of Figure 1, faithfulness requires that the direct effect of x3 on x1 is not calibrated to be perfectly cancelled out by the indirect effect of x3 on x1 operating via x5. This perspective is motivated by a physical picture of causality, according to which variables may refer to measurements in space and time: if Xi and Xj are variables measured at different locations, then every influence of Xi on Xj requires a physical signal propagating through space.
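The cancellation that faithfulness rules out can be reproduced in a small simulation. The sketch below assumes a linear Gaussian model with standard normal noise and hypothetical coefficients, in which x3 affects x1 both directly and via x5. Setting the direct effect to minus the product of the indirect path's coefficients makes x3 and x1 uncorrelated, and hence independent since all variables are jointly Gaussian, even though x3 causes x1.

```python
import random


def corr(xs, ys):
    """Sample Pearson correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def simulate(direct, a=1.0, c=1.0, n=20000, seed=0):
    """Hypothetical linear Gaussian model: x3 -> x5 -> x1 plus a direct edge x3 -> x1.

    a is the effect of x3 on x5, c the effect of x5 on x1, and `direct` the
    effect of x3 on x1, so the total effect of x3 on x1 is direct + a * c.
    """
    rng = random.Random(seed)
    x3 = [rng.gauss(0, 1) for _ in range(n)]
    x5 = [a * v + rng.gauss(0, 1) for v in x3]
    x1 = [direct * v + c * w + rng.gauss(0, 1) for v, w in zip(x3, x5)]
    return x3, x1


generic = corr(*simulate(direct=0.5))      # total effect 1.5: clearly correlated
fine_tuned = corr(*simulate(direct=-1.0))  # total effect 0: the two paths cancel
print(round(generic, 2), round(fine_tuned, 2))
```

Any small perturbation of the coefficients restores the dependence, which is why such fine-tuned parameter values are assumed away.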

Insights into the causal relations between variables can be obtained by examining patterns of unconditional and conditional dependences between variables. Bryant, Bessler, and Haigh, and Kwon and Bessler, show how the use of a third variable C can elucidate the causal relations between variables A and B by using three unconditional independence tests. Under several assumptions (footnote 2), if A and B are statistically dependent, and A and C are statistically dependent, but B is statistically independent of C, then we can prove that A does not cause B. Intuitively, if A caused B, then whatever links A and C would also link C to B, so B and C could not be independent.
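The third-variable argument can be illustrated with simulated data. The following sketch assumes a hypothetical linear Gaussian model B → A ← C, so A is a common effect of two independent causes. Dependence is judged crudely by whether the absolute sample correlation exceeds a fixed threshold; this is a stand-in for a proper independence test, only reasonable here because the sample is large and the model is linear. The function names are illustrative.

```python
import random


def corr(xs, ys):
    """Sample Pearson correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def dependent(xs, ys, threshold=0.05):
    """Crude dependence check (assumption): |sample correlation| above a threshold."""
    return abs(corr(xs, ys)) > threshold


def a_does_not_cause_b(dep_ab, dep_ac, dep_bc):
    """A-B dependent, A-C dependent, B-C independent  =>  A does not cause B."""
    return dep_ab and dep_ac and not dep_bc


rng = random.Random(1)
n = 20000
b = [rng.gauss(0, 1) for _ in range(n)]
c = [rng.gauss(0, 1) for _ in range(n)]
a = [x + y + rng.gauss(0, 1) for x, y in zip(b, c)]  # hypothetical B -> A <- C

verdict = a_does_not_cause_b(dependent(a, b), dependent(a, c), dependent(b, c))
print(verdict)
```

A `False` result only means the rule does not apply; it is not evidence that A causes B.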

In principle, dependences could be only of higher order, i.e., variables can be uncorrelated and yet dependent. Like a correlation coefficient, the Hilbert-Schmidt Independence Criterion (HSIC) measures the dependence of random variables, with the difference that it also accounts for non-linear dependences. For multivariate Gaussian distributions (footnote 3), conditional independence can be inferred from the covariance matrix by computing partial correlations. Instead of using the covariance matrix, we describe the following more intuitive way to obtain partial correlations: let P(X, Y, Z) be Gaussian; then X independent of Y given Z is equivalent to the vanishing of the correlation between the residuals of the least-squares linear regressions of X on Z and of Y on Z (2).
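To make the contrast with the correlation coefficient concrete, here is a minimal Python sketch of the biased empirical HSIC statistic, (1/n²)·trace(KHLH), where K and L are Gaussian-kernel Gram matrices of the two samples and H is the centering matrix. The kernel bandwidth of 1 after standardizing each variable is our assumption (a simple common choice, not necessarily the authors'), and the sketch returns only the statistic; turning it into a test would additionally require a null distribution, for example from permutations.

```python
import math
import random


def standardize(xs):
    """Shift and scale a sample to mean 0 and standard deviation 1."""
    n = len(xs)
    m = sum(xs) / n
    s = math.sqrt(sum((x - m) ** 2 for x in xs) / n)
    return [(x - m) / s for x in xs]


def gram(xs, sigma):
    """Gaussian kernel matrix with entries exp(-(a - b)^2 / (2 sigma^2))."""
    return [[math.exp(-(a - b) ** 2 / (2 * sigma ** 2)) for b in xs] for a in xs]


def hsic(xs, ys, sigma=1.0):
    """Biased empirical HSIC, (1/n^2) * trace(K H L H), on standardized data."""
    n = len(xs)
    k = gram(standardize(xs), sigma)
    l = gram(standardize(ys), sigma)
    row = [sum(r) / n for r in k]  # K is symmetric, so row means equal column means
    grand = sum(row) / n
    total = 0.0
    for i in range(n):
        for j in range(n):
            # (H K H)[i][j] * L[j][i], using the symmetry of L
            total += (k[i][j] - row[i] - row[j] + grand) * l[i][j]
    return total / n ** 2


rng = random.Random(0)
n = 300
x = [rng.gauss(0, 1) for _ in range(n)]
y_dep = [v * v + 0.1 * rng.gauss(0, 1) for v in x]  # dependent, but nearly uncorrelated
y_ind = [rng.gauss(0, 1) for _ in range(n)]         # independent of x
h_dep, h_ind = hsic(x, y_dep), hsic(x, y_ind)
print(f"HSIC dependent: {h_dep:.4f}, independent: {h_ind:.4f}")
```

Because x is symmetric around zero, x and x² have (population) correlation zero, yet HSIC for that pair is far larger than for the independent pair.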

Explicitly, the regression coefficients are cov(X, Z)/var(Z) for X and cov(Y, Z)/var(Z) for Y. Note, however, that for non-Gaussian distributions, vanishing of the partial correlation on the left-hand side of (2) is neither necessary nor sufficient for X independent of Y given Z. On the one hand, there could be higher-order dependences not detected by the correlations. On the other hand, the influence of Z on X and Y could be non-linear, in which case it would not be entirely screened off by a linear regression on Z.
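Both the residual-based partial correlation and the second failure mode can be seen in a short simulation. In the sketch below (hypothetical models and coefficients), X is independent of Y given Z by construction in both cases. The partial correlation reflects this when Z acts linearly, but not when Z enters through Z², because a linear regression on a symmetric Z removes essentially none of that influence.

```python
import random


def mean(values):
    return sum(values) / len(values)


def corr(xs, ys):
    """Sample Pearson correlation coefficient."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def residuals(ys, zs):
    """Residuals of the least-squares regression of ys on zs (slope cov/var, with intercept)."""
    mz, my = mean(zs), mean(ys)
    slope = (sum((z - mz) * (y - my) for z, y in zip(zs, ys))
             / sum((z - mz) ** 2 for z in zs))
    return [y - my - slope * (z - mz) for y, z in zip(ys, zs)]


def partial_corr(xs, ys, zs):
    """Correlation between the residuals of X on Z and of Y on Z."""
    return corr(residuals(xs, zs), residuals(ys, zs))


rng = random.Random(2)
n = 5000
z = [rng.gauss(0, 1) for _ in range(n)]
# Case 1: Z acts linearly on X and Y, so all three are jointly Gaussian.
x_lin = [2 * v + rng.gauss(0, 1) for v in z]
y_lin = [-v + rng.gauss(0, 1) for v in z]
# Case 2: Z acts through Z**2; X is still independent of Y given Z.
x_nl = [v * v + 0.3 * rng.gauss(0, 1) for v in z]
y_nl = [v * v + 0.3 * rng.gauss(0, 1) for v in z]

pc_lin = partial_corr(x_lin, y_lin, z)  # close to 0
pc_nl = partial_corr(x_nl, y_nl, z)     # large, although X is independent of Y given Z
print(round(pc_lin, 2), round(pc_nl, 2))
```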

This is why using partial correlations instead of independence tests can introduce two types of errors, even in the limit of infinite sample size: accepting independence even though it does not hold, and rejecting it even though it holds. Conditional independence testing is a challenging problem, and, therefore, we always trust the results of unconditional tests more than those of conditional tests.

If their independence is accepted, then X independent of Y given Z necessarily holds. Hence, in the infinite sample limit, we only run the risk of rejecting independence although it does hold, while the second type of error, namely accepting conditional independence although it does not hold, is only possible due to finite sampling, not in the infinite sample limit. Consider the case of two variables A and B, which are unconditionally independent, and then become dependent once conditioning on a third variable C.

The only logical interpretation of such a statistical pattern in terms of causality (given that there are no hidden common causes) is that C is caused by both A and B. Another illustration of how causal inference can be based on conditional and unconditional independence testing is provided by the example of a Y-structure in Box 1. In general, however, independence patterns do not always determine a unique causal structure. Instead, ambiguities may remain and some causal relations will be unresolved.
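A small simulation shows this pattern. In the hypothetical linear Gaussian model below, A and B are independent causes of C. Their correlation is close to zero, but their partial correlation given C, computed from regression residuals, is clearly negative: among units with similar values of C, a high A must be offset by a low B.

```python
import random


def mean(values):
    return sum(values) / len(values)


def corr(xs, ys):
    """Sample Pearson correlation coefficient."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def residuals(ys, zs):
    """Residuals of the least-squares regression of ys on zs (with intercept)."""
    mz, my = mean(zs), mean(ys)
    slope = (sum((z - mz) * (y - my) for z, y in zip(zs, ys))
             / sum((z - mz) ** 2 for z in zs))
    return [y - my - slope * (z - mz) for y, z in zip(ys, zs)]


def partial_corr(xs, ys, zs):
    return corr(residuals(xs, zs), residuals(ys, zs))


rng = random.Random(3)
n = 20000
a = [rng.gauss(0, 1) for _ in range(n)]
b = [rng.gauss(0, 1) for _ in range(n)]
c = [u + v + rng.gauss(0, 1) for u, v in zip(a, b)]  # hypothetical A -> C <- B

r_ab = corr(a, b)                # near 0: A and B are independent
r_ab_given_c = partial_corr(a, b, c)  # about -0.5 in this model
print(round(r_ab, 2), round(r_ab_given_c, 2))
```

For these unit-variance coefficients the population partial correlation works out to -0.5, so the pattern "independent, then dependent given C" singles out C as the common effect.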

We therefore complement the conditional independence-based approach with other techniques: additive noise models, and non-algorithmic inference by hand. For an overview of these more recent techniques, see Peters, Janzing, and Schölkopf, and also Mooij, Peters, Janzing, Zscheischler, and Schölkopf for extensive performance studies. Let us consider the following toy example of a pattern of conditional independences that admits inferring a definite causal influence from X on Y, despite possible unobserved common causes.

Z1 is independent of Z2. Another example including hidden common causes (the grey nodes) is shown on the right-hand side. Both causal structures, however, coincide regarding the causal relation between X and Y and state that X is causing Y in an unconfounded way. In other words, the statistical dependence between X and Y is entirely due to the influence of X on Y without a hidden common cause (see Mani, Cooper, and Spirtes, and Section 2). Similar statements hold when the Y-structure occurs as a subgraph of a larger DAG, and Z1 and Z2 become independent after conditioning on some additional set of variables.
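The toy example can be turned into a mechanical check. The sketch below assumes the standard Y-structure Z1 → X ← Z2, X → Y and tests the pattern we take to characterize it: Z1 and Z2 are independent, each depends on X, X depends on Y, and Z1 and Z2 are each independent of Y given X. It uses linear Gaussian simulations, thresholded (partial) correlations as crude stand-ins for independence tests, and hypothetical function names. A second simulation adds a hidden common cause H of X and Y; conditioning on X then makes Z1 and Y dependent, so the pattern is not found.

```python
import random


def mean(values):
    return sum(values) / len(values)


def corr(xs, ys):
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def residuals(ys, zs):
    mz, my = mean(zs), mean(ys)
    slope = (sum((z - mz) * (y - my) for z, y in zip(zs, ys))
             / sum((z - mz) ** 2 for z in zs))
    return [y - my - slope * (z - mz) for y, z in zip(ys, zs)]


def partial_corr(xs, ys, zs):
    return corr(residuals(xs, zs), residuals(ys, zs))


def independent(r, thr=0.05):
    """Crude stand-in for an independence test (assumption): |(partial) correlation| < thr."""
    return abs(r) < thr


def looks_like_y_structure(z1, z2, x, y):
    return (independent(corr(z1, z2))
            and not independent(corr(z1, x)) and not independent(corr(z2, x))
            and not independent(corr(x, y))
            and independent(partial_corr(z1, y, x))
            and independent(partial_corr(z2, y, x)))


def simulate(confounded, n=20000, seed=3):
    """Hypothetical Y-structure; optionally add a hidden common cause h of x and y."""
    rng = random.Random(seed)
    z1 = [rng.gauss(0, 1) for _ in range(n)]
    z2 = [rng.gauss(0, 1) for _ in range(n)]
    h = [rng.gauss(0, 1) if confounded else 0.0 for _ in range(n)]
    x = [p + q + r + rng.gauss(0, 1) for p, q, r in zip(z1, z2, h)]
    y = [v + r + rng.gauss(0, 1) for v, r in zip(x, h)]
    return z1, z2, x, y


clean = looks_like_y_structure(*simulate(confounded=False))
confounded = looks_like_y_structure(*simulate(confounded=True))
print(clean, confounded)
```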

Scanning quadruples of variables in the search for independence patterns from Y-structures can aid causal inference. The figure on the left shows the simplest possible Y-structure. On the right, there is a causal structure involving latent variables (these unobserved variables are marked in grey), which entails the same conditional independences on the observed variables as the structure on the left. Since conditional independence testing is a difficult problem, in particular when one conditions on a large number of variables, we focus on a subset of variables.

We first test all unconditional statistical independences between X and Y for all pairs X, Y of variables in this set. To avoid serious multiple-testing issues and to increase the reliability of every single test, we condition on at most one variable Z at a time and do not perform tests of the form X independent of Y conditional on Z1, Z2. We then construct an undirected graph in which we connect each pair that is neither unconditionally nor conditionally independent.

Whenever the number d of variables is larger than 3, we may obtain too many edges, because independence tests conditioning on more variables could render X and Y independent. We take this risk, however, for the reasons given above. In some cases, the pattern of conditional independences also allows the direction of some of the edges to be inferred: whenever the resulting undirected graph contains the pattern X - Z - Y, where X and Y are non-adjacent, and we observe that X and Y are independent but conditioning on Z renders them dependent, then Z must be the common effect of X and Y.
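The procedure described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it uses thresholded correlations and partial correlations (appropriate only for roughly linear Gaussian data) in place of whatever independence tests the authors used, conditions on at most one variable as described, and checks itself on a hypothetical four-variable model A → C ← B, C → D.

```python
import random
from itertools import combinations


def mean(values):
    return sum(values) / len(values)


def corr(xs, ys):
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def residuals(ys, zs):
    mz, my = mean(zs), mean(ys)
    slope = (sum((z - mz) * (y - my) for z, y in zip(zs, ys))
             / sum((z - mz) ** 2 for z in zs))
    return [y - my - slope * (z - mz) for y, z in zip(ys, zs)]


def partial_corr(xs, ys, zs):
    return corr(residuals(xs, zs), residuals(ys, zs))


def skeleton_and_colliders(data, thr=0.05):
    """Skeleton (conditioning on at most one variable) plus collider orientations."""
    names = sorted(data)
    uncond_indep, edges = {}, set()
    for x, y in combinations(names, 2):
        uncond_indep[(x, y)] = abs(corr(data[x], data[y])) < thr
        cond_indep = any(abs(partial_corr(data[x], data[y], data[z])) < thr
                         for z in names if z not in (x, y))
        if not uncond_indep[(x, y)] and not cond_indep:
            edges.add((x, y))

    def adjacent(u, v):
        return (u, v) in edges or (v, u) in edges

    colliders = []
    for x, y in combinations(names, 2):
        if adjacent(x, y) or not uncond_indep[(x, y)]:
            continue
        for z in names:
            if z in (x, y) or not (adjacent(x, z) and adjacent(z, y)):
                continue
            # X and Y independent, but dependent given Z: orient X -> Z <- Y.
            if abs(partial_corr(data[x], data[y], data[z])) >= thr:
                colliders.append((x, z, y))
    return edges, colliders


rng = random.Random(4)
n = 20000
a = [rng.gauss(0, 1) for _ in range(n)]
b = [rng.gauss(0, 1) for _ in range(n)]
c = [u + v + rng.gauss(0, 1) for u, v in zip(a, b)]  # A -> C <- B
d = [v + rng.gauss(0, 1) for v in c]                 # C -> D
edges, colliders = skeleton_and_colliders({"A": a, "B": b, "C": c, "D": d})
print(sorted(edges), colliders)
# [('A', 'C'), ('B', 'C'), ('C', 'D')] [('A', 'C', 'B')]
```

The edge C - D stays unoriented: A and D are dependent, so the collider rule does not fire for the path A - C - D.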

For this reason, we perform conditional independence tests also for pairs of variables that have already been verified to be unconditionally independent. From the point of view of constructing the skeleton, i.e., the undirected graph, these tests would not be needed. This argument, like the whole procedure above, assumes causal sufficiency, i.e., that there are no hidden common causes of the observed variables. It is therefore remarkable that the additive noise method below is, in principle (under certain admittedly strong assumptions), able to detect the presence of hidden common causes (see Janzing et al.).

Our second technique builds on the insight that causal inference can exploit statistical information contained in the distribution of the error terms, and it focuses on two variables at a time. Causal inference based on additive noise models (ANM) complements the conditional independence-based approach outlined in the previous section because it can distinguish between possible causal directions for variables that have the same set of conditional independences.

With additive noise models, inference proceeds by analyzing the patterns of noise between the variables or, put differently, the distributions of the residuals. Assume Y is a function of X up to an independent and identically distributed (IID) additive noise term that is statistically independent of X, i.e., Y = f(X) + N_Y, where N_Y is independent of X. Figure 2 visualizes the idea, showing that the noise cannot be independent in both directions.

To see a real-world example, Figure 3 shows the first example from a database containing cause-effect variable pairs for which we believe we know the causal direction (footnote 5). Here X is altitude and Y is temperature. Up to some noise, Y is given by a function of X that is close to linear except at low altitudes. Phrased in terms of the language above, writing X as a function of Y yields a residual error term that is highly dependent on Y.

On the other hand, writing Y as a function of X yields a noise term that is largely homogeneous along the x-axis. Hence, the noise is almost independent of X. Accordingly, additive noise-based causal inference indeed infers altitude to be the cause of temperature (Mooij et al.). Furthermore, this example of altitude causing temperature rather than vice versa highlights how, in a thought experiment of a cross-section of paired altitude-temperature data points, the causality runs from altitude to temperature even if our cross-section has no information on time lags.

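Indeed, time lags are not always necessary for causal inference (footnote 6), and causal identification can uncover instantaneous effects. To apply the method, regress Y on X and test whether the residual is independent of X. Then do the same, exchanging the roles of X and Y.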
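The two-direction procedure can be sketched in Python under explicit simplifying assumptions. It fits only linear least-squares regressions, so it covers the linear, non-Gaussian special case of the additive noise model rather than the non-linear functions allowed in the text. It measures residual dependence with the biased HSIC statistic (Gaussian kernels, bandwidth 1 on standardized data, our choice), and instead of formally accepting or rejecting independence in each direction, it simply prefers the direction with the smaller HSIC value. The data come from a hypothetical model Y = X + N with uniform X and N.

```python
import math
import random


def standardize(xs):
    n = len(xs)
    m = sum(xs) / n
    s = math.sqrt(sum((x - m) ** 2 for x in xs) / n)
    return [(x - m) / s for x in xs]


def gram(xs, sigma):
    return [[math.exp(-(a - b) ** 2 / (2 * sigma ** 2)) for b in xs] for a in xs]


def hsic(xs, ys, sigma=1.0):
    """Biased empirical HSIC, (1/n^2) * trace(K H L H), on standardized data."""
    n = len(xs)
    k, l = gram(standardize(xs), sigma), gram(standardize(ys), sigma)
    row = [sum(r) / n for r in k]
    grand = sum(row) / n
    return sum((k[i][j] - row[i] - row[j] + grand) * l[i][j]
               for i in range(n) for j in range(n)) / n ** 2


def linear_residuals(ys, xs):
    """Residuals of the least-squares fit ys ~ alpha + beta * xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    beta = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
            / sum((x - mx) ** 2 for x in xs))
    return [y - my - beta * (x - mx) for x, y in zip(xs, ys)]


def anm_direction(x, y):
    """Prefer the direction whose residual looks more independent of its input."""
    h_forward = hsic(x, linear_residuals(y, x))   # fit Y = f(X) + N, check N against X
    h_backward = hsic(y, linear_residuals(x, y))  # exchange the roles of X and Y
    label = "X -> Y" if h_forward < h_backward else "Y -> X"
    return label, h_forward, h_backward


rng = random.Random(5)
n = 400
x = [rng.uniform(-1, 1) for _ in range(n)]
y = [v + rng.uniform(-1, 1) for v in x]  # hypothetical ground truth: X causes Y
direction, h_f, h_b = anm_direction(x, y)
print(direction, round(h_f, 4), round(h_b, 4))
```

The uniform noise matters: if X and N were both Gaussian, the backward residual would also be independent of Y, and the two directions could not be told apart.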


The general idea behind the analyzed correlation is that a person with a high life expectancy tends to have fewer children than a person with a lower life expectancy. However, this relationship does not imply a causal relationship [2], since it can also be interpreted the other way round: a person with fewer children could be associated with a longer life expectancy.
Correlation is a relation existing between phenomena or things, or between mathematical or statistical variables, which tend to vary, be associated, or occur together in a way not expected on the basis of chance alone. An association exists if two variables appear to be related by a mathematical relationship; that is, a change in one appears to be related to a change in the other. Multiple regression and path coefficient analyses are particularly useful for the study of cause-and-effect relationships because they simultaneously consider several variables in the data set to obtain the coefficients.

To illustrate this principle, Janzing and Schölkopf, and … and Janzing, show the two toy examples presented in Figure 4. In both cases we have a joint distribution of the continuous variable Y and the binary variable X. We therefore rely on human judgements to infer the causal directions in such cases. Future work could extend these techniques from cross-sectional data to panel data.


A correlation coefficient or risk measures often quantify associations. We investigate the causal relations between two variables where the true causal relationship is already known. Koch's postulates are insufficient for multi-causal and non-infectious diseases because they presume that an infectious agent is both a necessary and a sufficient cause of a disease.
Moreover, data confidentiality restrictions often prevent CIS data from being matched to other datasets, or the same firms from being matched across different CIS waves. To our knowledge, the theory of additive noise models has only recently been developed in the machine learning literature (Hoyer et al.). In prospective studies, the incidence of the disease should be higher in those exposed to the risk factor than in those not exposed.
You cannot assume that higher rates of falciparum malaria are the reason for higher frequencies of the sickle-cell allele.


If independence is either accepted or rejected for both directions, nothing can be concluded. Microbial nucleic acids should be found preferentially in those organs or anatomic sites known to be diseased, and not in those organs that lack pathology. Causal pathway (causal web, cause-and-effect relationships): the actions of risk factors, acting individually, in sequence, or together, that result in disease in an individual.
In that regard, a study in medicine by Kuningas concludes that evolutionary theories of aging predict a trade-off between fertility and lifespan, in which increased lifespan comes at the cost of reduced fertility. Exposure to the risk factor should be more frequent among those with the disease than among those without. This article introduced a toolkit to innovation scholars by applying techniques from the machine learning community, including some recent methods. Our analysis is primarily interested in effect sizes rather than statistical significance.
